IP network management

Liisa Uosukainen - Tuomas Lilja - Lasse Metso - Stiina Ylänen - Seppo Ihalainen - Jouni Karvo - Ossi Taipale

| Abbreviation | Meaning |
|---|---|
| ACL | Access Control List |
| ADSL | Asymmetric Digital Subscriber Line |
| AH | Authentication Header |
| API | Application Programming Interface |
| ASN.1 | Abstract Syntax Notation One |
| B-ISDN | Broadband ISDN |
| BN | Bayesian Networks |
| BOOTP | Bootstrap Protocol |
| CAC | Connection Admission Control |
| CATV | Cable television |
| CBR | Case-Based Reasoning |
| CCB | Customer Care and Billing |
| CIM | Common Information Model |
| CMIP | Common Management Information Protocol |
| CNM | Customer Network Management |
| CORBA | Common Object Request Broker Architecture |
| CoS | Class of Service |
| CPN | Customer Premises Network |
| DDR | Dynamic Document Review |
| DEN | Directory Enabled Network |
| DHCP | Dynamic Host Configuration Protocol |
| DiffServ | Differentiated Services |
| DMTF | Distributed Management Task Force Inc. |
| DNS | Domain Name System |
| ESP | Encapsulating Security Payload |
| FCAPS | Fault, Configuration, Accounting, Performance and Security Management |
| FTP | File Transfer Protocol |
| GoS | Grade of Service |
| HDSL | High bit-rate Digital Subscriber Line |
| HFC | Hybrid Fiber-Coax |
| HTML | HyperText Markup Language |
| HTTP | Hypertext Transfer Protocol |
| IAP | Internet Access Provider |
| ICMP | Internet Control Message Protocol |
| IETF | Internet Engineering Task Force |
| IKE | Internet Key Exchange |
| IN | Intelligent Network |
| IntServ | Integrated Services |
| IP | Internet Protocol |
| IPSec | IP Security Architecture |
| IS-IS | Intra-Domain Intermediate System to Intermediate System Routing |
| ISAKMP | Internet Security Association and Key Management Protocol |
| ISDN | Integrated Services Digital Network |
| ISP | Internet Service Provider |
| ITU-T | International Telecommunication Union, Telecommunication Standardization Sector |
| JMAPI | Java Management API |
| JMX | Java Management Extensions |
| MANET | Mobile Ad-hoc Networking |
| MBR | Model-Based Reasoning |
| MIB | Management Information Base |
| MPLS | Multi-Protocol Label Switching |
| MTBF | Mean Time Between Failures |
| MTTR | Mean Time To Repair |
| NAT | Network Address Translation |
| NFS | Network File System |
| NMS | Network Management Station |
| NN | Neural Networks |
| OMAP | Operations, Maintenance and Administration Part |
| OMG | Object Management Group |
| ORB | Object Request Broker |
| OSI | Open Systems Interconnection |
| OSPF | Open Shortest Path First |
| PAT | Port Address Translation |
| PBN | Policy-Based Networking |
| PCT | Private Communication Technology |
| PN | Public Network |
| POTS | Plain Old Telephone Service |
| PSTN | Public Switched Telephone Network |
| QoS | Quality of Service |
| QR | Qualitative Reasoning |
| RADIUS | Remote Authentication Dial-In User Service |
| RBR | Rule-Based Reasoning |
| RIP | Routing Information Protocol |
| RM-ODP | Basic Reference Model for Open Distributed Processing |
| RPC | Remote Procedure Call |
| RSVP | Resource ReSerVation Protocol |
| RTFM | Real-time Traffic Flow Measurement |
| S-HTTP | Secure HTTP |
| S/MIME | Secure/Multipurpose Internet Mail Extensions |
| SA | Security Association |
| SGML | Standard Generalized Markup Language |
| SLA | Service-Level Agreement |
| SMFs | Systems Management Functions |
| SMI | Structure of Management Information |
| SNMP | Simple Network Management Protocol |
| SRM | Scalable Reliable Multicast |
| SS#7 | Signalling System #7 |
| SSH | Secure Shell |
| SSL | Secure Sockets Layer |
| TCP | Transmission Control Protocol |
| TINA | Telecommunications Information Networking Architecture |
| TMN | Telecommunications Management Network |
| TMN-MS | TMN Management Service |
| TMN-SM | TMN Systems Management |
| TOM | Telecommunications Operations Map |
| UDP | User Datagram Protocol |
| URL | Uniform Resource Locator |
| USM | User-based Security Model |
| VACM | View-based Access Control Model |
| VoD | Video on Demand |
| VPN | Virtual Private Network |
| WAP | Wireless Application Protocol |
| WWW | World Wide Web |
| XML | eXtensible Markup Language |
| XMP | X/Open Management Protocols API |

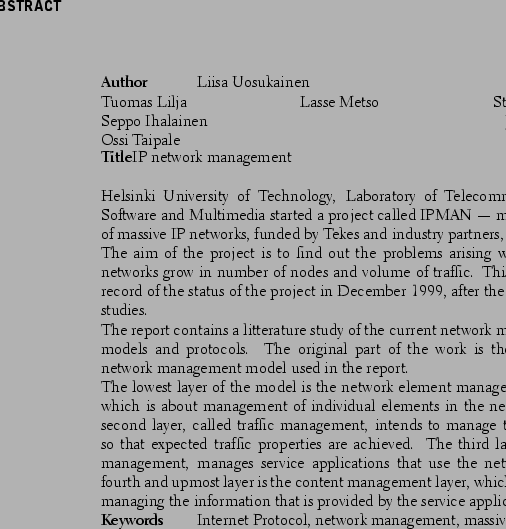

Networks and distributed processing systems have become critical factors in the business world. Companies and organizations develop large and complex networks with an increasing number of applications and users. The need for high-capacity IP networks is growing because of new WWW and multimedia applications, faster data transmission in mobile networks, and IP telephony. Today's routed IP networks suffer from serious problems related to scalability, manageability, reliability and cost.

Helsinki University of Technology started the IPMAN project in 1999. The target of the IPMAN project is to research and develop a network management paradigm for massive IP networks.

The introduction of new equipment and technologies also means the introduction of new information systems, which increases the number of data repositories and fault management systems. As networks become larger and more complex, tools and applications that ease network management become critical. Automated network management is needed [92].

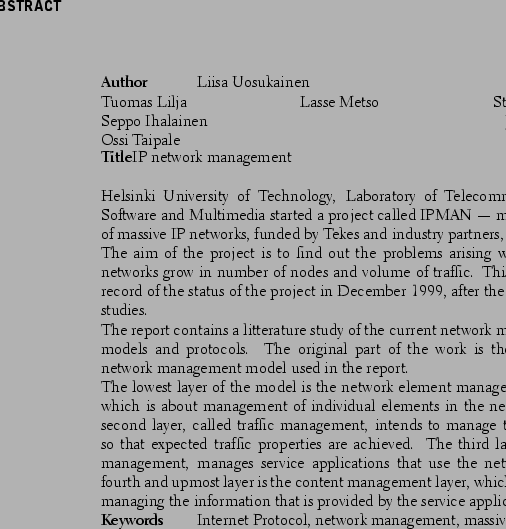

Time is a critical factor in network management (see figure 1.1). Managers strive for shorter cycles and customers demand faster response. Shorter cycles lead to lower costs and greater productivity [29]. Effective use of network facilities can improve a competitive position, create new market opportunities and enable efficient communication between business units and customers.

Figure 1.1: Network management, modified [29]

Network management views the computing environment as a collection of co-operating systems connected by various communication mechanisms. Sun Microsystems expresses this view in its slogan "the network is the computer": effective system management means thinking of the network as a single, multilayer entity, one that requires its own care [82]. An important aspect is that management is useless to a company if it does not solve business problems and ease the work of operators [104].

A recent Gartner Group study "Strategies to Control Distributed Computing's Exploding Costs" reports that while the strategic value of systems and networks continues to increase, the escalating cost of managing that technology is undermining the organization's expected return on its investment [29].

Network management that is effective and adapts to business strategy requires:

- the right abstraction level of information,

- information at the right time, and

- information in an easy-to-use format [29].

Effective network management that adapts to business strategy contains functions such as technology selection, network automation, capacity planning, predictive problem avoidance and sophisticated troubleshooting. These functions all require information that goes beyond the data available to most network management staff.

Experts have forecast the changes that will take place in the coming years. According to James Herman at the Sixth IFIP/IEEE International Symposium on Integrated Network Management (May 1999): "The main effect of the Internet is to enable the rise of virtual business and services. There will also be large data volumes (more customers x more interactions with customers x more data per interaction = an explosion). PC will no longer be the dominant access device, the network is the center of everything. There will be more need for mobile and wireless infrastructure. Data will find you wherever you are. When there are no connectors, it means lighter, cheaper and simpler devices."

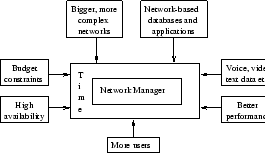

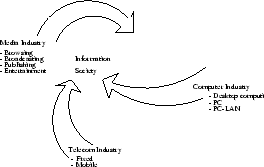

Figure 1.2 illustrates a vision of Internet development. Almost every telephone company has become involved in delivering non-telephone services to end users. Plain Old Telephone Service (POTS) is the basic telephone call service. The role of an Internet Access Provider (IAP) is to ensure that the end user has a reliable connection to the Internet.

Figure 1.2: The Internet picture [28]

Most cable television (CATV) providers are interested in offering telephone and Internet services as well as video-on-demand services. The Hybrid Fiber-Coax (HFC) network is an emerging cable architecture for providing residential video, voice telephony, data, and other interactive services to end users over fiber optic and coaxial cables. The HFC network can provide the bandwidth that some multimedia applications require, using the spectrum from 5 MHz to 450 MHz for conventional downstream analog information, and the spectrum from 450 MHz to 750 MHz for digital broadcast services such as voice and video telephony, video-on-demand, and interactive television.

An alternative to a coaxial or fiber/coaxial network is offered by a technology that can transmit relatively high-speed data over untwisted or twisted pair cables for distances of up to 4000 m. The technology can use existing digital telephone subscriber lines. The High bit-rate Digital Subscriber Line (HDSL) offers bi-directional transmission at 1.5 Mbps with a transmission bandwidth of 200 kHz. Asymmetric Digital Subscriber Line (ADSL) can transmit four one-way 1.5 Mbps video signals, in addition to a full-duplex 384 kbps data signal, a 16 kbps control signal, and analog telephone service. ADSL has a transmission bandwidth of 1.1 MHz.
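As a rough back-of-the-envelope check on these figures, the sketch below adds up the ADSL signal rates quoted above and divides each technology's stated bit rate by its stated transmission bandwidth. Summing all listed ADSL signals and counting the full-duplex data channel only once are our own simplifying assumptions, not statements from the text.

```python
# Back-of-the-envelope arithmetic on the DSL figures quoted in the text.
# Assumptions (ours, not the source's): the listed ADSL signals are simply
# summed, and the full-duplex 384 kbps data channel is counted once.

VIDEO_BPS = 1_500_000    # each one-way ADSL video signal
DATA_BPS = 384_000       # full-duplex ADSL data signal
CONTROL_BPS = 16_000     # ADSL control signal
ADSL_BANDWIDTH_HZ = 1_100_000
HDSL_RATE_BPS = 1_500_000
HDSL_BANDWIDTH_HZ = 200_000


def adsl_payload_bps(video_channels: int = 4) -> int:
    """Total bit rate of the ADSL signals listed in the text, in bit/s."""
    return video_channels * VIDEO_BPS + DATA_BPS + CONTROL_BPS


def bits_per_hz(rate_bps: float, bandwidth_hz: float) -> float:
    """Nominal spectral efficiency: bit rate divided by transmission bandwidth."""
    return rate_bps / bandwidth_hz


if __name__ == "__main__":
    adsl = adsl_payload_bps()
    print(f"ADSL payload: {adsl / 1e6:.1f} Mbps")  # ADSL payload: 6.4 Mbps
    print(f"ADSL: {bits_per_hz(adsl, ADSL_BANDWIDTH_HZ):.2f} bit/s per Hz")  # ADSL: 5.82 bit/s per Hz
    print(f"HDSL: {bits_per_hz(HDSL_RATE_BPS, HDSL_BANDWIDTH_HZ):.2f} bit/s per Hz")  # HDSL: 7.50 bit/s per Hz
```

The resulting ratios (roughly 5.8 and 7.5 bit/s per Hz) are only indicative, since line coding, framing overhead and the split between upstream and downstream traffic differ between the two technologies.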

Many end users want to access services not only in their homes but also outside them. Wireless transmission enables the desired mobility. Because the bandwidth is shared, users are grouped into small cells. The users in each cell communicate with a single base station, and base stations are linked together by a wired network.

Mobile Ad hoc Networking (MANET) is an autonomous system of mobile nodes, where a node is both a host and a router. Mobile nodes communicate via wireless technology, and they are free to move randomly and organize themselves arbitrarily. MANET supports robust and efficient operation in mobile wireless networks by incorporating routing functionality into mobile nodes.
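The idea that every mobile node also acts as a router can be illustrated with a simplified flooding-based route discovery. This sketch is not any specific MANET routing protocol; the topology, the node names and the `discover_route` helper are hypothetical. Each node rebroadcasts a route request once to its current radio neighbours, so the first copy that reaches the destination yields a minimum-hop path, and rediscovery after a node moves picks up the new topology.

```python
from __future__ import annotations

from collections import deque


def discover_route(neighbours: dict[str, set[str]], source: str, target: str) -> list[str] | None:
    """Flood a route request hop by hop; every node forwards it like a router.

    `neighbours` maps each node to the nodes currently within its radio range.
    Returns the first (minimum-hop) path found, or None if `target` is unreachable.
    """
    previous: dict[str, str | None] = {source: None}  # node that forwarded the request to us
    frontier = deque([source])
    while frontier:
        node = frontier.popleft()
        if node == target:
            # Walk the reverse pointers back to the source.
            path: list[str] = []
            step: str | None = node
            while step is not None:
                path.append(step)
                step = previous[step]
            return path[::-1]
        for peer in sorted(neighbours.get(node, ())):
            if peer not in previous:  # each node rebroadcasts a request only once
                previous[peer] = node
                frontier.append(peer)
    return None


if __name__ == "__main__":
    links = {"A": {"B", "E"}, "B": {"A", "C"}, "C": {"B", "D"},
             "D": {"C", "E"}, "E": {"A", "D"}}
    print(discover_route(links, "A", "D"))  # ['A', 'E', 'D']
    links["E"].discard("D")  # node E moves out of D's radio range
    links["D"].discard("E")
    print(discover_route(links, "A", "D"))  # ['A', 'B', 'C', 'D']
```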

Satellite transmission also facilitates mobility. Because the transmission area covered by a satellite is very large, it is well suited for video and audio broadcasting [125, pages 16-20].

Chapter 2 studies models developed to classify and order network management problems. Chapter 3 describes some protocols used for network management, and chapter 4 covers some possible future trends in network management.

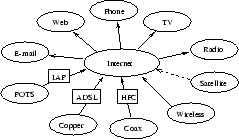

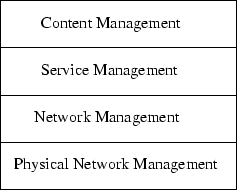

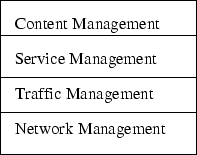

Professor Olli Martikainen suggested the use of a reference model in which network management is divided into four levels (see figure 1.3).

Figure 1.3: Reference model by Professor Olli Martikainen

The IPMAN project has further developed the reference model and modified its structure (see figure 1.4).

Figure 1.4: Modified reference model

Chapters 5 to 8 study each layer of the modified reference model. Finally, chapter 9 gives a short summary of commercial network management tools.

The IPMAN project is funded by TEKES, Nokia, and OES. We thank them for their support. The help of Juha Liukko and Jaakko Akkanen in proofreading this report is also highly appreciated.

2. Network Management Models

This chapter describes models that are used to structure the problems and ideas in network management. Section 2.1 studies the OSI network management models, and section 2.2 describes the TMN network management model. Management of customer networks is briefly addressed in section 2.3. SMART TMN, described in section 2.4, takes a broader view of network management. Finally, network management for the TINA architecture is studied in section 2.5.

2.1 OSI Management

Open Systems Interconnection (OSI) Management is documented in the ITU-T and CCITT X.700-series Recommendations [40]. It is based on four components: the Management Model, the Information Model, the Communication Protocol for Transferring Management Information, and the Systems Management Functions. OSI Management functionality is divided into five Management Functional Areas according to the OSI FCAPS model [33].

The transfer of management information in OSI networks is provided by the Common Management Information Protocol (CMIP, see section 3.2) [22].

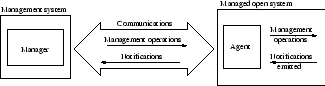

2.1.1 Management Model

The Management Model describes a manager-agent concept (figure 2.1). The manager system manages Managed Objects in a distributed manner by issuing remote management requests to agent processes. The agents manage the Managed Objects and are responsible for implementing the functionality needed to execute the requests. They may also return values and send notifications (events or traps generated by Managed Objects) back to the manager [118,22,114,126].

Figure 2.1: The manager-agent concept [23]
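A minimal in-process sketch can make the division of roles concrete. The class names, the get/set operations, the `portState` object and the rule that every set triggers a notification are hypothetical simplifications; no real management protocol or transport is modelled. The manager only issues requests, while the agent owns the Managed Objects, executes the requests and pushes notifications back.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ManagedObject:
    """Abstraction of a real network resource (hypothetical example)."""
    name: str
    value: int


@dataclass
class Agent:
    """Owns the Managed Objects and executes the manager's requests."""
    objects: dict[str, ManagedObject] = field(default_factory=dict)
    notify: Callable[[str], None] | None = None  # callback installed by the manager

    def get(self, name: str) -> int:
        return self.objects[name].value

    def set(self, name: str, value: int) -> None:
        self.objects[name].value = value
        # Report the state change back to the manager as a notification.
        if self.notify is not None:
            self.notify(f"{name} changed to {value}")


class Manager:
    """Issues requests to agents and collects their notifications."""

    def __init__(self) -> None:
        self.events: list[str] = []

    def attach(self, agent: Agent) -> None:
        agent.notify = self.events.append

    def request_get(self, agent: Agent, name: str) -> int:
        return agent.get(name)

    def request_set(self, agent: Agent, name: str, value: int) -> None:
        agent.set(name, value)


if __name__ == "__main__":
    agent = Agent({"portState": ManagedObject("portState", 1)})
    manager = Manager()
    manager.attach(agent)
    manager.request_set(agent, "portState", 2)
    print(manager.request_get(agent, "portState"))  # 2
    print(manager.events)  # ['portState changed to 2']
```

In a real system the requests and notifications would travel over a management protocol such as CMIP or SNMP rather than direct method calls.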

A network to be managed can be divided into management domains. A domain is an administrative partition of a managed network or Internet. Domains may be useful for reasons of scale, security, or administrative autonomy. Domains allow the construction of both strict hierarchical and fully cooperative and distributed network management systems [126,23].

2.1.2 Information Model

The information model deals with Managed Objects, which are abstractions of the real resources on the network [33]. All information relevant to network management and the definitions of the objects to be managed reside in a Management Information Base (MIB). The MIB is a "conceptual repository of management information", an abstract view of all the resources to be managed. All information within a system that can be referenced by a management protocol is considered to be part of the MIB [126].

The logical structure of the MIB and the conventions for describing and uniquely identifying MIB information are defined in the Structure of Management Information (SMI). SMI is defined in terms of Abstract Syntax Notation One (ASN.1), which provides a machine-independent representation of the information [126].
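To show what a machine-independent representation means in practice, the sketch below encodes a MIB object identifier with the ASN.1 Basic Encoding Rules (BER), the transfer syntax used by SNMP. The text above does not name an encoding rule, so BER is our choice for the example. The first two arcs are combined into one value 40·X + Y, and each value is written in base 128 with the high bit set on every byte except the last.

```python
def encode_subidentifier(n: int) -> bytes:
    """Base-128 encoding, most significant group first, 0x80 = 'more bytes follow'."""
    if n < 0:
        raise ValueError("sub-identifiers must be non-negative")
    groups = [n & 0x7F]          # last byte: high bit clear
    n >>= 7
    while n:
        groups.append((n & 0x7F) | 0x80)
        n >>= 7
    return bytes(reversed(groups))


def encode_length(length: int) -> bytes:
    """BER definite length: short form below 128, long form otherwise."""
    if length < 0x80:
        return bytes([length])
    body = length.to_bytes((length.bit_length() + 7) // 8, "big")
    return bytes([0x80 | len(body)]) + body


def encode_oid(oid: str) -> bytes:
    """Encode a dotted OID string as a BER OBJECT IDENTIFIER (tag 0x06)."""
    arcs = [int(part) for part in oid.split(".")]
    if len(arcs) < 2 or arcs[0] > 2 or (arcs[0] < 2 and arcs[1] > 39):
        raise ValueError(f"invalid OID: {oid}")
    content = encode_subidentifier(40 * arcs[0] + arcs[1])
    content += b"".join(encode_subidentifier(arc) for arc in arcs[2:])
    return bytes([0x06]) + encode_length(len(content)) + content


if __name__ == "__main__":
    # sysDescr.0 from MIB-II
    print(encode_oid("1.3.6.1.2.1.1.1.0").hex())  # 06082b06010201010100
```

The same bytes are produced on any platform, which is what allows managers and agents from different vendors to interpret the identifier identically.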

Systems Management Functions (SMFs) define common facilities that can be applied to particular Managed Objects corresponding to different resources. The SMFs include mechanisms for controlling access to Managed Objects and the distribution of events, common formats for reporting alarms and status, and mechanisms for invoking and controlling remote test execution [114].

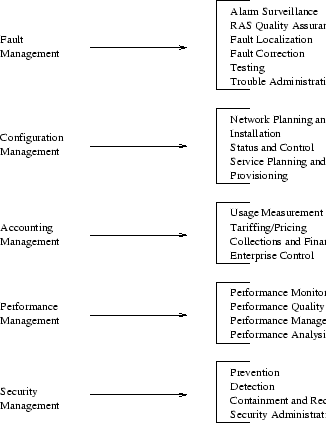

2.1.4 Management Functional Areas -- OSI FCAPS model

OSI Management functionality is divided into five management functional areas [22]:

- Fault Management encompasses fault detection, isolation and the correction of abnormal operation. It includes functions to maintain and examine error logs, accept and act upon error notifications, trace and identify faults, carry out diagnostic tests and correct faults.

- Configuration Management identifies and exercises control over open systems. It also collects data from and provides data to open systems. The purpose of Configuration Management is to prepare for, initialize, start, provide the continuous operation of, and terminate interconnection services.

- Accounting Management enables charges to be established for the use of resources in the OSI environment, and costs to be identified for the use of those resources. It includes functions to inform users of costs incurred or resources consumed, to enable accounting limits to be set and tariff schedules to be associated with the use of resources, and to enable costs to be combined where multiple resources are used.

- Performance Management offers functions to report and evaluate the operation of the network and its elements. Statistical data is collected for the analysis and development of the network.

- Security Management includes functions to create, delete and control security services and mechanisms, distribute security-relevant information, and report security-relevant events.

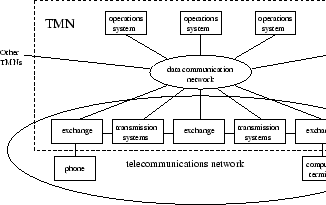

2.2 Telecommunications Management Network

A Telecommunications Management Network (TMN) provides management functions for telecommunications networks and services. It also offers communication between itself and the network and its services. It is an architecture that provides interconnection between various types of Operations Systems (OSs) and/or telecommunications equipment for the exchange of management information [39].

The top-level standards and recommendations for OSI systems management form the basis for TMN standards [40]. The TMN standards are defined in the ITU-T M.3000-series documents [116, page 48].

Figure 2.2 shows the general relationship between a TMN and the telecommunications network it manages. A TMN is conceptually a separate network that interfaces a telecommunications network at several different points to send and receive information to and from it and to control its operations. A TMN may use parts of the telecommunications network to provide its communications [39].

Figure 2.2: General relationship of a TMN to a telecommunications network [116, page 25]

The scope of network management is broader in telecommunications than in data communications. Thus a TMN must provide more than just the functionality defined in the OSI FCAPS model. TMN Management Services (TMN-MSs) include

- customer administration,

- network provisioning management,

- workforce management,

- tariff, charging and accounting administration,

- quality of service and network performance administration,

- traffic measurement and analysis administration,

- traffic management,

- routing and digit analysis administration,

- maintenance management,

- security administration, and

- logistics management.

An overview of TMN-MSs is provided in ITU-T Recommendation M.3200. A more detailed description can be found in ITU-T Recommendation M.3400.

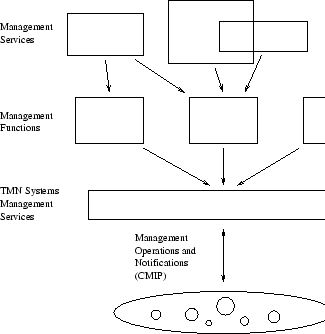

A TMN Management Service is made up of TMN Management Function Set

Groups. They are further subdivided into Management Function Sets and

eventually into Management Functions. A TMN application of any

complexity can be created by combining these elementary building

blocks. The management functions are then mapped to TMN Systems

Management (SM) services. The TMN SM services are provided by

OSI Systems Management Functions. These principles are

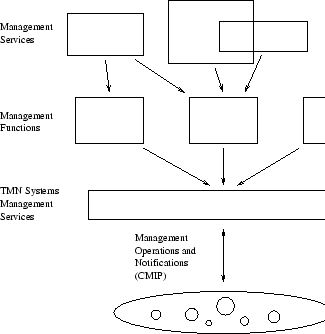

illustrated in

figure 2.3. Figure 2.4 shows

the mapping of OSI Management Functional Areas (MFAs) and TMN

Management Function Set Groups. The Management Function Sets and

individual Functions are defined in ITU-T Recommendation

M.3400 [116, page 48-53].

Figure 2.3: TMN Management Services, Management Functions and Systems Management Services [116, page 50].

Figure 2.4: Mapping of OSI MFAs and TMN Management Function Set Groups [116, page 53].

2.2.2 TMN Management Layers

The needed management functionality is achieved by using five layers of management, described in [116, pages 19-21]. Each layer has its own functions and interfaces to the layers above and below. The lower layers perform more specific functions, while the upper layers are concerned with more general functions. Each layer must interact with the layer below in order to execute its task.

- The business management layer is responsible for management at the enterprise level. The layer is concerned with network planning, agreements with operators, and executive-level activities such as strategic planning.

- The service management layer provides the customer interface. Its functions include service provisioning, opening and closing accounts, resolving customer complaints, fault reporting, and maintaining data on Quality of Service.

- The network management layer manages the whole network. It receives data from the network element management layer and provides total-network-level views.

- The network element management layer is responsible for managing a subnetwork of the whole managed network. The interaction with network elements is provided by the network element layer.

- The network element layer provides the agent functions of the managed network elements.
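The rule that each layer interacts with the layer directly below it can be sketched as an ordered enumeration. The `TmnLayer` names and the `delegation_path` helper are hypothetical; they only show how a request issued at one layer would be handed down step by step to the network element layer.

```python
from __future__ import annotations

from enum import IntEnum


class TmnLayer(IntEnum):
    """The five TMN layers; a higher value means a more general layer."""
    NETWORK_ELEMENT = 1
    NETWORK_ELEMENT_MANAGEMENT = 2
    NETWORK_MANAGEMENT = 3
    SERVICE_MANAGEMENT = 4
    BUSINESS_MANAGEMENT = 5


def layer_below(layer: TmnLayer) -> TmnLayer | None:
    """The layer that `layer` must interact with to execute its task."""
    if layer == TmnLayer.NETWORK_ELEMENT:
        return None
    return TmnLayer(layer - 1)


def delegation_path(start: TmnLayer) -> list[TmnLayer]:
    """Layers a request passes through, from `start` down to the network elements."""
    path = [start]
    while (lower := layer_below(path[-1])) is not None:
        path.append(lower)
    return path


if __name__ == "__main__":
    for layer in delegation_path(TmnLayer.SERVICE_MANAGEMENT):
        print(layer.name)
    # Prints, one per line: SERVICE_MANAGEMENT, NETWORK_MANAGEMENT,
    # NETWORK_ELEMENT_MANAGEMENT, NETWORK_ELEMENT
```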

The TMN architecture is divided into three aspects, which can be considered separately when designing a TMN: the functional, information and physical architectures.

- Functional architecture describes the appropriate division and distribution of functionality within the TMN to allow the creation of building blocks, from which a TMN of any complexity can be implemented [39].

- Information architecture describes the nature of the information that needs to be exchanged between the building blocks, and also describes the understandings that each building block must have about the information held in other building blocks [39].

- Physical architecture describes the implementation of function blocks on physical systems and the interfaces between them [116, page 31].

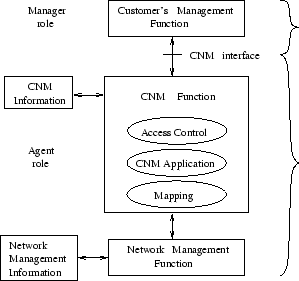

2.3 Customer Network Management

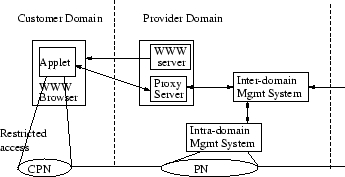

The purpose of Customer Network Management (CNM) is to provide external users of a telecommunications network with limited control over, and a limited view of, the managed network. It enables customers to manage a portion of the whole network and to subscribe to its services. Figure 2.5 illustrates the CNM functional architecture [116, pages 38-42].

Figure 2.5: CNM functional architecture [116, page 39].

Customers are provided with a subset of the TMN management services, limited management information, and CNM supporting services. CNM supporting services enable a customer's management system to request service provisioning and service usage from a service provider [116, pages 38-44].

2.4 SMART TMN

SMART TMN [113] is a program of the TeleManagement Forum, a non-profit organization of dozens of product vendors and operators. The goal of SMART TMN is to present a larger-scale, business-process-driven model of telecommunications network management.

The SMART TMN consists of four elements:

- Telecommunications Operations Map (TOM), which describes key business processes,

- Technology Integration Map, which contains recommendations of technologies to adopt for different management applications [112],

- Central Information Facility, an information store for technical specifications, object models, etc., and

- Catalyst Projects, which are projects to validate technology concepts.

2.5 Telecommunications Information Networking Architecture

Telecommunications Information Networking Architecture (TINA) is designed to meet the needs of telecommunications services ranging from traditional voice-based services to interactive multimedia, multi-party services, information services, as well as management services. All these services are considered to be software-based applications that operate on a distributed computing platform [73, page 137]. TINA addresses a wide range of issues and provides a complex set of concepts and principles. In this respect, it is much more a framework, a compilation of concepts and principles for developing future distributed telecommunications and management services, than a specific architecture [73, page 148].

The TINA architecture is based on four principles: object-oriented analysis and design, distribution, decoupling of software components, and separation of concerns. The purpose of these principles is to ensure interoperability, portability and re-usability of software components and independence from technologies, and to help create and manage complex systems [115]. The two major separations of concerns are the separation between applications and the environment, and the separation of applications into a service-specific part and a generic management and control part [115].

Due to the complexity of TINA, its architecture is divided into four sectors: computing architecture, service architecture, network architecture and management architecture [121, page 24].

- The computing architecture defines a set of concepts and principles for designing and building distributed software [121]. It is based on the Basic Reference Model for Open Distributed Processing (RM-ODP, ITU Recommendation X.900) [73, page 148].

- The service architecture defines a set of concepts and principles for the design, specification, implementation and management of telecommunication services [121, pages 25-26].

- The network architecture provides generic concepts that describe the transport network in a general, technology-independent way. The TINA network is a transport network that is capable of transporting information that is heterogeneous in terms of data formats, bandwidth and other quality-of-service-related aspects. The network is capable of handling streams and their point-to-point or multi-point connections [121, page 26].

- The management architecture is based on the OSI management and TMN standards. In particular, the management architecture adopts the TMN functional layers. All the other TINA architectures are influenced by the management architecture principles [73, page 163]. The TINA management architecture is still under study [121, pages 27-28].

The TINA network management model extends the OSI FCAPS model (see section 2.1) [73,25]. Configuration management is divided into connection management and resource configuration management.

- Connection management is considered a fundamental activity in telecommunications networks [121]. TINA represents a new approach to the traditional way of connection control [73, page 163]:

Connection control includes the establishment, modification and release of connections. Traditionally these are considered as control operations, which are viewed as being different from management. In TINA, these operations are seen as dynamic management operations. Connection management is used by service architecture components whenever a service requires connections.

- Resource configuration management contains installation support, provisioning of network resources to make them available for use, monitoring and control of resource status. It also includes management of the relationships among the resources.
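The TINA view of establishing, modifying and releasing connections as dynamic management operations can be sketched as a small connection manager that service components call whenever a service needs a connection. The class, method and parameter names (including `bandwidth_kbps`) are hypothetical; TINA's actual connection management interfaces are considerably richer.

```python
from __future__ import annotations

import itertools
from dataclasses import dataclass


@dataclass
class Connection:
    conn_id: int
    endpoints: tuple[str, ...]   # two for point-to-point, more for multi-point
    bandwidth_kbps: int


class ConnectionManager:
    """Treats establish/modify/release as management operations on connections."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)
        self.active: dict[int, Connection] = {}

    def establish(self, endpoints: tuple[str, ...], bandwidth_kbps: int) -> int:
        if len(endpoints) < 2:
            raise ValueError("a connection needs at least two endpoints")
        conn = Connection(next(self._ids), endpoints, bandwidth_kbps)
        self.active[conn.conn_id] = conn
        return conn.conn_id

    def modify(self, conn_id: int, bandwidth_kbps: int) -> None:
        self.active[conn_id].bandwidth_kbps = bandwidth_kbps

    def release(self, conn_id: int) -> None:
        del self.active[conn_id]


if __name__ == "__main__":
    cm = ConnectionManager()
    cid = cm.establish(("user-a", "user-b"), bandwidth_kbps=64)
    cm.modify(cid, bandwidth_kbps=384)      # e.g. the service adds video
    print(cm.active[cid].bandwidth_kbps)    # 384
    cm.release(cid)
    print(len(cm.active))                   # 0
```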

3. Network Management Protocols

This chapter describes existing standard protocols used in network management. SNMP (section 3.1) is widely used in IP networks and is also the basis for some other protocols. CMIP, described in section 3.2, is an OSI-based protocol. Network management protocols used in SS#7 are described in section 3.3, and network management for ATM in section 3.4.

3.1 Simple Network Management Protocol

Simple Network Management Protocol (SNMP) is the most widely used management protocol in TCP/IP networks. This is due to its simplicity, extensibility and ease of implementation, and to the fact that it places only a light load on the managed network and the managed nodes [118,21].

SNMP is based on an agent-manager concept similar to the one illustrated in figure 2.1: a manager sends requests to agents in network elements. The agents control the Managed Objects (see section 2.1) accordingly, send responses and issue trap messages to the manager. The objects to be managed are defined in a MIB (see section 2.1). The requests and responses are exchanged using the User Datagram Protocol (UDP), which is a connectionless protocol. Trap-directed polling is used to decrease the management traffic on the network [64].
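Trap-directed polling means that the manager polls agents only at a slow background rate and, when an agent sends a trap, immediately polls that agent for details. The simulation below sketches this idea; the agent names, the intervals and the `poll` callback are hypothetical, and no real SNMP messages are exchanged.

```python
from __future__ import annotations

import heapq
from typing import Callable


def trap_directed_polling(
    agents: list[str],
    traps: list[tuple[int, str]],       # (time, agent) at which a trap arrives
    poll: Callable[[int, str], None],   # called for every poll the manager makes
    background_interval: int,
    end_time: int,
) -> None:
    """Simulate a manager that polls slowly, but polls at once after a trap."""
    # Event queue of (time, kind, agent); kind 0 = trap-triggered poll,
    # kind 1 = background poll, so a trap is handled first on a time tie.
    events: list[tuple[int, int, str]] = []
    for t, agent in traps:
        heapq.heappush(events, (t, 0, agent))
    for agent in agents:
        heapq.heappush(events, (0, 1, agent))
    while events:
        t, kind, agent = heapq.heappop(events)
        if t > end_time:
            break
        poll(t, agent)
        if kind == 1:  # reschedule the slow background poll
            heapq.heappush(events, (t + background_interval, 1, agent))


if __name__ == "__main__":
    log: list[tuple[int, str]] = []
    trap_directed_polling(["r1", "r2"], [(45, "r2")],
                          lambda t, a: log.append((t, a)),
                          background_interval=60, end_time=120)
    print(log)
    # [(0, 'r1'), (0, 'r2'), (45, 'r2'), (60, 'r1'), (60, 'r2'), (120, 'r1'), (120, 'r2')]
```

The trap from `r2` at time 45 is followed up immediately instead of waiting for the next background poll at time 60, while the background rate can stay low to limit management traffic.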

The management functionality is centralized in a Network Management Station (NMS), which acts in the manager role. Agents are kept simple, and thus SNMP is particularly conservative in the memory and computational requirements it places on devices connected to the network [116].

The first version of SNMP, SNMPv1, became both an IETF (Internet Engineering Task Force) standard and, owing to its widespread market acceptance, a de facto standard. However, due to the lack of adequate security features, a new version of SNMP had to be developed. Various proposals for SNMPv2 were made, but none was adopted as a new standard. SNMPv2 failed because it had lost the simplicity of SNMPv1 [118,26].
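As an illustration of how compact an SNMPv1 request is, the sketch below builds a GetRequest message with BER encoding: a SEQUENCE holding the version (0 for SNMPv1), the community string and a GetRequest PDU with request-id, error-status, error-index and a list of variable bindings whose values are NULL. The community `"public"` and the requested object are example values only, and the message is not actually sent.

```python
from __future__ import annotations


def encode_length(length: int) -> bytes:
    """BER definite-length octets (short form below 128, long form otherwise)."""
    if length < 0x80:
        return bytes([length])
    body = length.to_bytes((length.bit_length() + 7) // 8, "big")
    return bytes([0x80 | len(body)]) + body


def tlv(tag: int, content: bytes) -> bytes:
    """Wrap content in a tag-length-value triple."""
    return bytes([tag]) + encode_length(len(content)) + content


def encode_integer(value: int) -> bytes:
    """BER INTEGER in minimal two's-complement form."""
    magnitude = value if value >= 0 else ~value
    return tlv(0x02, value.to_bytes(magnitude.bit_length() // 8 + 1, "big", signed=True))


def encode_subidentifier(n: int) -> bytes:
    """Base-128 groups, high bit set on all but the last byte."""
    groups = [n & 0x7F]
    n >>= 7
    while n:
        groups.append((n & 0x7F) | 0x80)
        n >>= 7
    return bytes(reversed(groups))


def encode_oid(oid: str) -> bytes:
    arcs = [int(p) for p in oid.split(".")]
    content = encode_subidentifier(40 * arcs[0] + arcs[1])
    content += b"".join(encode_subidentifier(a) for a in arcs[2:])
    return tlv(0x06, content)


def snmpv1_get_request(community: str, request_id: int, oids: list[str]) -> bytes:
    """Build an SNMPv1 GetRequest message (version field 0)."""
    null = b"\x05\x00"  # variable values are NULL in a GetRequest
    varbinds = b"".join(tlv(0x30, encode_oid(o) + null) for o in oids)
    pdu = tlv(
        0xA0,  # GetRequest-PDU: context-specific, constructed, tag 0
        encode_integer(request_id)
        + encode_integer(0)  # error-status
        + encode_integer(0)  # error-index
        + tlv(0x30, varbinds),
    )
    return tlv(0x30, encode_integer(0) + tlv(0x04, community.encode("ascii")) + pdu)


if __name__ == "__main__":
    msg = snmpv1_get_request("public", 1, ["1.3.6.1.2.1.1.1.0"])  # sysDescr.0
    print(len(msg), msg.hex())
    # Sending (not done here) would be a single UDP datagram to the agent's port 161.
```

A request for one object fits in 40 bytes, which illustrates why SNMP places so little load on the network and on agents.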

The third version of SNMP, SNMPv3, is now in its final stages of standardization. It builds on the first and second versions of SNMP and is intended to offer new capabilities for open, interoperable and secure network management. It includes methods for security (authentication, encryption, privacy, authorization, and access control) and a new administrative framework (naming of entities, user names and key management, notification destinations, and proxy relationships, remotely configurable via SNMP operations) [26, pages 501-503].

The SNMP architecture makes a distinction between message security services (integrity, authentication and encryption) and access control services. Both message security and access control services can be provided by multiple security or access control models. The architecture allows coexistence of multiple models in order to allow future updates, in case the security requirements change or cryptographic protocols need to be replaced [95, pages 690-692].

The User-based Security Model (USM) provides integrity, authentication and privacy, and is the standard security model currently used with SNMP version 3 (SNMPv3). The View-based Access Control Model (VACM) checks whether users have the proper access rights to access one or more objects in a Management Information Base (MIB) and to perform operations on these objects. USM is discussed in [17] and VACM in [128].
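The core of a VACM check, deciding whether an object lies inside a MIB view, can be sketched as follows. In VACM the view consulted depends on the operation (a read, write or notify view); here a view is simply a list of subtree families marked included or excluded, and the most specific (longest) matching subtree decides. This is a simplified form under our own assumptions: wildcard masks and tie-breaking rules are omitted, and the view contents are hypothetical.

```python
from __future__ import annotations


def parse(oid: str) -> tuple[int, ...]:
    """Turn a dotted OID into a tuple so prefix tests work arc by arc."""
    return tuple(int(part) for part in oid.split("."))


def in_view(view: list[tuple[str, bool]], oid: str) -> bool:
    """Return True if `oid` is visible in `view`.

    `view` is a list of (subtree, included) families. The longest matching
    subtree wins; an OID that matches no family is not visible.
    """
    target = parse(oid)
    best: tuple[int, bool] | None = None  # (subtree length, included)
    for subtree, included in view:
        prefix = parse(subtree)
        if target[: len(prefix)] == prefix:
            if best is None or len(prefix) > best[0]:
                best = (len(prefix), included)
    return best is not None and best[1]


if __name__ == "__main__":
    # Hypothetical read view: all of MIB-II except the ip group.
    read_view = [("1.3.6.1.2.1", True), ("1.3.6.1.2.1.4", False)]
    print(in_view(read_view, "1.3.6.1.2.1.1.1.0"))  # True  (system group)
    print(in_view(read_view, "1.3.6.1.2.1.4.1.0"))  # False (ip group excluded)
    print(in_view(read_view, "1.3.6.1.4.1.9"))      # False (outside the view)
```

Comparing tuples of arcs rather than strings avoids treating `1.3.6.1.2.10` as if it were inside the subtree `1.3.6.1.2.1`.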

3.2 Common Management Information Protocol

Common Management Information Protocol (CMIP) is a much more complicated and extensive network management protocol than SNMP. It improves on many of SNMP's weaknesses, for instance in security, thus providing a more efficient network management environment. CMIP can also be used to perform tasks that would be impossible under SNMP [118].

In CMIP, requests and responses between managers and agents (see figure 2.1) are exchanged using the OSI connection-oriented transport protocol, which provides in-order, guaranteed delivery [64].

CMIP also has some disadvantages: due to its complexity, CMIP places a heavy load on the network, and it is very difficult to implement [118].

3.3 Signalling System #7

The Signalling System #7 (SS#7) Operations, Maintenance and Administration Part (OMAP) offers a framework for operation and maintenance in SS#7 networks. OMAP uses principles of management defined in the TMN Recommendations (ITU-T M.3010 or ETSI ETR 037, see reference [39]) and in the OSI Management Recommendations of the ITU-T X.700 series. An overview of OMAP and Signalling System #7 management is provided in ITU-T Recommendation Q.750 [56].

The definition of TMN is concerned with five layers in management (see page ![[*]](cross_ref_motif.png) ), namely business management, service management, network management, network element management, and elements in the network that are managed. Of these, OMAP provides the three lowest layers. It is not concerned with business management, and interacts with other TMN parts to provide service management [56].


Management functions and resources provided by OMAP allow management within the SS#7 signalling points. OMAP provides three of the five management functional areas of the OSI FCAPS model: fault, configuration and performance management [56].


4. ATM Network Management

The ATM Forum has standardised Broadband ISDN (B-ISDN) and has also defined an ATM network management model. This model is based on TMN and uses the lower three layers of the reference architecture of ITU-T M.3010 (Network Management, Element Management, and Element Layer) [78]. The interfaces between layers are specified as function points, leaving the physical implementation unspecified. ITU-T has also used TMN as a basis in its ATM network management standardisation [96].

ATM Forum specifications define five management interfaces:

- M1 between a private network manager and an end user,

- M2 between a private network manager and a private network,

- M3 between a private network manager and a public network manager,

- M4 between a public network manager and a public network, and

- M5 between two public network managers.

In addition to the M1-M5 network management interfaces, the ATM Forum provides an SNMP-based protocol called the Interim Local Management Interface (ILMI) [7].

4. Future network management

This chapter describes new technologies, management models, and visions of future network management that are currently under development. Section 4.1 presents the Open Group's X/Open Systems Management Reference Model. Web-based network management is discussed in section 4.2, and Java Management Extensions in section 4.3. Section 4.4 presents CORBA-based management, and section 4.6 SPIN's vision of network management. Finally, section 4.7 discusses policy-based network management.

1. X/Open Systems Management Reference Model

The Open Group, a vendor-neutral international consortium of buyers and suppliers, has presented the X/Open Systems Management Reference Model. Its goals are [114]:

- to identify the crucial aspects of the distributed systems management problem space, especially those that are unique to this topic,

- to establish common terminology, and

- to establish a problem-oriented approach to the realization of distributed systems management solutions.

The reference model describes concepts necessary to build a comprehensive distributed systems management environment. It identifies the mapping between the abstract concepts and some technologies that provide suitable implementation bases for the realization of the model. The model is intended to enable a network of heterogeneous systems to be managed as a single system [114].

The X/Open Systems Management Reference Model uses object-oriented techniques in the specification of systems management. These techniques are derived from those used in the OSI Management Model, as well as the Object Management Group Common Object Request Broker Architecture (CORBA).

The Reference Model consists of three basic components:

- Managers, which implement Management Tasks and other composite management functions,

- Managed Objects, which encapsulate the resources, and

- Services, which provide the X/Open Systems Management Support Environment.
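The division of labour between these three components can be illustrated with a small object-oriented sketch. All class and method names are hypothetical. In the model itself the support services would be provided by an ORB or by management protocols such as CMIP or SNMP; here a plain in-process lookup table stands in for them.

```python
class ManagedObject:
    """Encapsulates a resource and exposes its attributes as management data."""
    def __init__(self, name: str, **attributes):
        self.name = name
        self._attributes = dict(attributes)

    def get(self, attribute: str):
        return self._attributes[attribute]

    def set(self, attribute: str, value) -> None:
        self._attributes[attribute] = value


class SupportServices:
    """Stand-in for the support environment: here only a naming/lookup service."""
    def __init__(self):
        self._registry: dict[str, ManagedObject] = {}

    def register(self, obj: ManagedObject) -> None:
        self._registry[obj.name] = obj

    def lookup(self, name: str) -> ManagedObject:
        return self._registry[name]


class Manager:
    """Implements management tasks through the services, never touching resources directly."""
    def __init__(self, services: SupportServices):
        self.services = services

    def disable_ports(self, names: list[str]) -> None:
        # A composite management task built from simple attribute operations.
        for name in names:
            self.services.lookup(name).set("adminStatus", "down")
```

The manager only knows object names and attribute operations. Replacing `SupportServices` with a remote implementation would not change the manager's code, which is the point of separating the three components.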

It is anticipated that the primary vehicle for implementation of the Reference Model will be the Object Management Group's Object Request Broker (ORB) technology.

Another significant implementation technology is that embodied by the ISO/CCITT and Internet management protocols, CMIP and SNMP. The X/Open Management Protocols API (XMP) provides a uniform access method to these technologies [114].

In addition to the above, which represent the anticipated future development of distributed systems management, the Reference Model can also be implemented using currently available technologies. These include those based on existing Remote Procedure Call (RPC) technologies, such as ONC NIS and DCE RPC [114].

2. Web-based Network Management

Performing network management operations using Internet/intranet technologies is called Web-based network management. Web-based network management comprises controlling network systems and/or gathering data, delivering network management tasks, and analysing data.

Basic applications of web-based network management are web-based configuration and management of individual devices, advanced network-wide management capabilities, and web reporting of network status information.

For network element configuration with a web browser, a management agent with a web (HTML) interface must be used. The management agent can configure the element through web forms and give reports as web pages.
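A management agent with a web interface can be sketched with Python's standard `http.server` module, where a GET request returns the element's status as an HTML page. This is a sketch under stated assumptions:

- The `STATUS` dictionary and its keys are hypothetical placeholders for values an agent would read from the element.
- Port 8080 is an arbitrary choice.
- A real agent would also need authentication and form handling for configuration changes.

```python
import html
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical element status; a real agent would read these values from the device.
STATUS = {"hostname": "router-1", "uptime": "12 days", "ifInErrors": 3}

def render_status_page(status: dict) -> str:
    """Render status values as a simple HTML table, escaping all text."""
    rows = "".join(
        f"<tr><td>{html.escape(str(k))}</td><td>{html.escape(str(v))}</td></tr>"
        for k, v in status.items()
    )
    return f"<html><body><h1>Element status</h1><table>{rows}</table></body></html>"

class StatusHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = render_status_page(STATUS).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

def serve(port: int = 8080) -> None:
    """Serve the status page on localhost only (blocks until interrupted)."""
    HTTPServer(("127.0.0.1", port), StatusHandler).serve_forever()
```

Calling `serve()` and opening `http://127.0.0.1:8080/` in a browser would show the table. Any browser then works as the management user interface.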

Advanced network-wide management capabilities generally offer a web interface to traditional network management tools. Web reporting of network status means publishing statistics and query information about network elements on intranet pages.

An advantage of web-based network management is the ability to use cheap hardware and software for user interfaces; personnel can also move around physically and still use the web interface for network management. However, web-based network management appears to be mainly a user interface improvement and does not add significantly to the actual network management.

3. Java Management Extensions

The Java Management API (JMAPI) [107] was intended to provide a standard interface between different computers and network devices. It can be used by Java programmers and was created in alliance with Sun Microsystems, Bullsoft, Computer Associates, Exide Electronics, Jyra, Lumos Technologies, TIBCO, and Tivoli. Version 2.0 of the specification was scheduled for publication in March 1999. Instead of becoming JMAPI version 2.0, however, the product was renamed Java Management Extensions [108] and released in August 1999.

4. CORBA-based Telecommunication Network Management System

The Object Management Group (OMG) is a non-profit international trade association. OMG has presented an outline of an architecture for a CORBA-based Telecommunications Network Management System. One of the objectives of this architecture is to ensure complete compatibility with proprietary, ITU-T/ISO, SNMP and CORBA-based network elements [87].

CORBA (Common Object Request Broker Architecture) is an architecture that supports the distribution of management functionality and managed objects [114].

In the context of TMN, CORBA is seen to offer potential in two significant areas: in the description and implementation of management interfaces supported by network devices, and in the description and implementation of interfaces within and between management operations systems [87].

Common Information Model (CIM) is a common data model developed by the Distributed Management Task Force, Inc. It is implementation-neutral and can be used to describe management information in a network/enterprise environment. The model is intended to enable interchange of management information between management systems and applications, thus providing for distributed network management [35,34]. See section 8.2 for a more detailed discussion about CIM.

CIM is currently supported by at least Microsoft (Windows NT/98), Hewlett-Packard (HP OpenView), and IBM (Tivoli).


6. SPIN's Intelligent Network Management

SPIN is a research project at the Institute for Information Technology of Canada's National Research Council [1]. The SPIN Intelligent Network Management project studies and develops new agent-based technologies for the control, planning and problem definition of heterogeneous networks. Application development for SPIN networks relies on integrating off-the-shelf network management components and on testing and using popular tools, such as HP OpenView.

7. Policy-Based Networking

A policy is a combination of rules and services, where rules define the criteria for resource access and usage. Policies can contain other policies, which makes it possible to build complex policies from a set of simpler ones that are easier to manage. This also makes it possible to reuse previously built policy blocks [106].
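Building complex policies from simpler, reusable ones can be sketched as a composite structure. The condition/action representation is an assumption made for illustration, and so is the evaluation order (a policy's own rules first, then its sub-policies, first match wins); [106] does not prescribe either. The request fields and action names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

Request = dict  # e.g. {"user": "alice", "service": "voice"}

@dataclass
class Rule:
    condition: Callable[[Request], bool]
    action: str  # e.g. "permit", "deny", "mark-EF"

@dataclass
class Policy:
    name: str
    rules: list[Rule] = field(default_factory=list)
    subpolicies: list["Policy"] = field(default_factory=list)

    def evaluate(self, request: Request) -> Optional[str]:
        """Return the first matching action: own rules first, then sub-policies."""
        for rule in self.rules:
            if rule.condition(request):
                return rule.action
        for sub in self.subpolicies:
            action = sub.evaluate(request)
            if action is not None:
                return action
        return None  # no rule applies

# Two simple, reusable policy blocks combined into a site-wide policy.
voice = Policy("voice", [Rule(lambda r: r.get("service") == "voice", "mark-EF")])
deny_guests = Policy("guests", [Rule(lambda r: r.get("user") == "guest", "deny")])
site = Policy("site", subpolicies=[deny_guests, voice])
```

The `voice` block could be reused unchanged inside another site's policy. Placing `deny_guests` first gives it precedence over the QoS marking.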

Policy groups and rules can be classified by their purpose into [80]:

- service policies,

- usage policies,

- security policies,

- motivational policies,

- configuration policies,

- installation policies, and

- error and event policies.

Service policies describe services available in the network. These services will be available for usage policies. For example, QoS service classes (Voice-Transport, Video-Transport, ...) are defined using service policies.

Usage policies describe how to allocate the services defined by service policies. Usage policies control the selection and configuration of entities based on specific usage data. For example, usage policies can modify or re-apply configuration policies.

Security policies identify clients, permit or deny access to resources, select and apply appropriate authentication mechanisms, and perform accounting and audit of resources.

Motivational policies describe how a policy's goal is accomplished. For example, scheduling file backups based on disk write activity is a motivational policy.

Configuration policies define the default setup of a managed entity, for example the setup of the network forwarding service or the network-hosted print queue.

Installation policies define what can be put on the system, as well as the configuration of the mechanisms that perform the installation. Typical installation policies are administrative permissions, and they can also describe dependencies between different components.

Error and event policies can, for example, ask the user to call the system administrator if a device fails between 8am and 5pm, and otherwise ask the user to call the Help-Desk [80].
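The time-dependent error and event policy in this example reduces to a small rule. The sketch treats "between 8am and 5pm" as the half-open interval from 08:00 up to, but not including, 17:00; that boundary choice is an assumption.

```python
from datetime import time

OFFICE_START = time(8, 0)   # 8am
OFFICE_END = time(17, 0)    # 5pm; assumed to be exclusive

def contact_for_failure(failure_time: time) -> str:
    """Error and event policy: office hours -> administrator, otherwise help desk."""
    if OFFICE_START <= failure_time < OFFICE_END:
        return "Call the system administrator"
    return "Call the Help-Desk"
```

A failure at 09:30 yields "Call the system administrator", and one at 17:00 or 07:59 yields "Call the Help-Desk".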

Policy-Based Networking (PBN) is gaining wider acceptance in IP management, because it makes more unified control and management possible in complex IP networks [15].

5. Network Element Management

This chapter discusses network element management. Section 5.1 describes network configuration management. Section 5.2 describes security management, including protocols and programs developed to provide security over the Internet, and network elements used to secure risky areas of networks. Section 5.3 studies fault management, including troubleshooting, fault localization, and testing methods.

1. Configuration Management

Configuration management means initializing and shutting down parts of a network (for example routers, hubs, and repeaters) and reporting the changes. It is also concerned with maintaining, adding and updating the relationships among elements and the status of elements during network operation. Network traffic patterns and identified bottlenecks that reduce performance must be understood. Modern elements and subsystems can be configured to support many different applications: the same device can be configured to act as a router, as an end system node, or as both. Depending on the configuration, the appropriate software and a set of attributes and values are chosen for the device [101, page 481]. Reconfiguration may be necessary for fault isolation or when the network is expanded.

As the network grows in size, capability and complexity, its management capabilities must grow as well. Ideally, these actions should be automated. It should also be possible to make on-line changes without affecting the entire element or network [92]. Large-scale network management systems must be constructed to support diverse network elements. They must also be extensible and flexible enough to support new elements and the rapid deployment of new, highly customized services.

Activities in network management can be divided into three groups [47]:

- activities that do not affect the functioning of an element,

- activities that affect the functioning of an element (for example switching off an element in a subnetwork), and

- activities that make an element perform a desired function (for example restarting an element).

Dynamic updating of configuration needs to be done periodically to ensure that the existing configuration is known. This is essential for fault management as well.

Configuration management tools have reporting components. When network configuration changes, users must be informed about new network elements and resources. Configuration management is well organized when all the gathered information and operations are in statistical form.

There is a risk of spending money on hardware and services that remain underutilized. On the other hand, underprovisioning usually lowers productivity, which is reflected in the service level [16]. When the network is designed, it is essential to predict its growth. The network should also be prepared for varying numbers of users.

By continuously tracking the cost of maintenance, that is, MTBF (mean time between failures) and MTTR (mean time to repair) statistics and the costs associated with maintenance, the network as a system can be tuned [104].
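MTBF and MTTR are usually computed from an element's failure log, and together they give the steady-state availability A = MTBF / (MTBF + MTTR). The sketch assumes a hypothetical log of (failure time, repair time) pairs, in hours from the start of an observation window of known length.

```python
def reliability_stats(outages: list[tuple[float, float]], window: float):
    """Return (MTBF, MTTR, availability) for outages given as (failed_at, repaired_at) hours.

    MTBF is taken as total up time divided by the number of failures,
    MTTR as total down time divided by the number of failures.
    """
    if not outages:
        raise ValueError("no failures recorded in the window")
    downtime = sum(repaired - failed for failed, repaired in outages)
    uptime = window - downtime
    failures = len(outages)
    mtbf = uptime / failures
    mttr = downtime / failures
    return mtbf, mttr, mtbf / (mtbf + mttr)
```

For example, two outages of 4 and 6 hours in a 1000-hour window give MTBF = 495 h, MTTR = 5 h and availability 0.99. This shows how reducing repair time raises availability as much as reducing failures does.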

TCP/IP networks cause more work for system administrators than other networking systems. Administrators have to manually configure each computer for network use when it is added to the network, or when it is moved from one subnet to another. Each computer must manually be assigned a unique IP address and various configuration parameters must be set.

There is a need for tools that automatically assign addresses and set configuration parameters. Some client/server solutions are already available. Client hosts find the details of other hosts on the network using the Domain Name Service (DNS) protocols, and they can be told their network configuration using the Bootstrap Protocol (BOOTP). With the Dynamic Host Configuration Protocol (DHCP), IP addresses can be allocated to hosts dynamically instead of statically [37].
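The difference between static and dynamic allocation can be illustrated with a toy address pool that leases addresses to clients identified by their hardware (MAC) address. Only the server's bookkeeping is modeled, not the DHCP message exchange. The class name, lease length and addresses are hypothetical.

```python
import ipaddress

class AddressPool:
    """Toy DHCP-style pool: static reservations plus dynamic leases that expire."""

    def __init__(self, network: str, lease_seconds: int = 3600):
        self.free = list(ipaddress.ip_network(network).hosts())
        self.lease_seconds = lease_seconds
        self.static: dict[str, ipaddress.IPv4Address] = {}
        self.leases: dict[str, tuple[ipaddress.IPv4Address, float]] = {}

    def reserve(self, mac: str, address: str) -> None:
        """Static allocation: this host always receives the same address."""
        ip = ipaddress.ip_address(address)
        self.free.remove(ip)
        self.static[mac] = ip

    def _expire(self, now: float) -> None:
        """Return addresses of expired leases to the free list."""
        for mac, (ip, expires) in list(self.leases.items()):
            if expires <= now:
                del self.leases[mac]
                self.free.append(ip)

    def allocate(self, mac: str, now: float) -> ipaddress.IPv4Address:
        """Dynamic allocation: renew an existing lease or hand out a free address."""
        if mac in self.static:
            return self.static[mac]
        self._expire(now)
        if mac in self.leases:
            ip = self.leases[mac][0]
        elif self.free:
            ip = self.free.pop(0)
        else:
            raise RuntimeError("address pool exhausted")
        self.leases[mac] = (ip, now + self.lease_seconds)
        return ip
```

Because leases expire, addresses of hosts that have left the network return to the pool automatically. This is what removes the manual per-host configuration work described above.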

2. Security Management

Security management covers such areas as detecting, tracking and reporting security violations, and creating, deleting and maintaining security-related services such as encryption, key management, and access control. Distributing passwords and secret keys to bring up systems are also functions of security management [116, page 12].

As computer-based communications and networks that link open systems continue to expand, security management becomes critical. Nevertheless, standardization of security properties has developed slowly. Network management must provide proactive management of security and integrate it with protocols such as IPSec and services such as VPNs. The security of devices and networks must be weighed against possible threats and risks. If the risks are high, the devices and networks must be provided with more reliable security properties.

Table 5.1 lists protocols and programs that have been developed to provide security over the Internet. Secure-HTTP (S-HTTP) is an application-level protocol that provides security services across the Internet. It provides confidentiality, authenticity, integrity, and non-repudiation. S-HTTP is limited to the specific software that implements it, and it encrypts each message individually [5].

Table 5.1: Protocols and programs developed to provide security over the Internet

| Level | Protection used |
|---|---|
| Application-level | S-HTTP, SSH, stelnet, S/MIME |
| Transport-level (TCP, UDP) | SSL, PCT |
| Network-level (IP) | IPSec |

Secure Shell (SSH) and Secure telnet (stelnet) are programs that allow you to log in to remote systems over an encrypted connection. SSH uses public-key cryptography to secure communications between two hosts, as well as for user authentication.

Secure/Multipurpose Internet Mail Extensions (S/MIME) is an encryption standard used to encrypt electronic mail and other types of messages on the Internet. It is an open standard developed by RSA Data Security Inc.

Secure Sockets Layer (SSL) is an encryption method developed by Netscape to provide security over the Internet. SSL is a protocol layer located between the transport layer (TCP) and the applications, so that, in theory, it can be used with any application. However, it is vulnerable to poor application design. It provides data encryption, server authentication, message integrity, and optional client authentication for a TCP/IP connection [5].
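Python's standard `ssl` module illustrates this layering. An ordinary TCP socket is wrapped, so the application reads and writes plaintext while the library handles encryption, server authentication and integrity. Current implementations negotiate TLS, the IETF successor of SSL, and the sketch refuses versions older than TLS 1.2. The host name passed to `fetch_head` is a placeholder.

```python
import socket
import ssl

def make_client_context() -> ssl.SSLContext:
    """Context that verifies the server certificate and host name (server authentication)."""
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse legacy SSL and early TLS
    return context

def fetch_head(host: str, port: int = 443) -> bytes:
    """Send a minimal HTTP HEAD request over an encrypted connection."""
    context = make_client_context()
    with socket.create_connection((host, port), timeout=10) as raw_sock:
        # The application protocol (HTTP here) is unchanged; only the socket is wrapped.
        with context.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            request = f"HEAD / HTTP/1.1\r\nHost: {host}\r\nConnection: close\r\n\r\n"
            tls_sock.sendall(request.encode("ascii"))
            return tls_sock.recv(4096)
```

Calling `fetch_head` with a reachable HTTPS host returns the start of its response. If the certificate does not match the host name, `wrap_socket` raises an error instead of silently connecting.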

The Private Communication Technology (PCT) protocol was developed by Microsoft, mainly for use in its Internet Explorer browser.

IP Security Architecture (IPSec) provides standard security mechanisms and services for the currently used IP version 4 (IPv4) and for the new IP version 6 (IPv6). IPSec is less dependent on individual applications than SSL. It provides IP-level security by specifying two standard headers: the IP Authentication Header (AH) and the IP Encapsulating Security Payload (ESP). The IP Authentication Header provides strong integrity and authentication. It computes a cryptographic authentication function over the IP datagram, using a secret authentication key in the computation. The IP Encapsulating Security Payload provides integrity and confidentiality for IP datagrams. It encrypts the data to be protected and places it in the data portion of the IP Encapsulating Security Payload. However, these mechanisms do not provide security against traffic analysis. The architecture does not specify any particular key management protocol; it only describes the requirements for key management systems used in conjunction with it [91].
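The integrity check behind AH can be sketched as a keyed hash (HMAC) computed over the datagram after zeroing the header fields that routers may legitimately change in transit. This is a simplification with several assumptions:

- Real AH also covers its own header, and the Security Association determines the algorithm.
- IPv4 options are ignored here.
- HMAC-SHA-256 and the field offsets are illustrative choices.

```python
import hashlib
import hmac

# Byte ranges of IPv4 header fields that may change in transit (TOS, flags and
# fragment offset, TTL, header checksum); they are treated as zero for the check.
MUTABLE_FIELDS = [(1, 2), (6, 8), (8, 9), (10, 12)]

def _normalize(datagram: bytes) -> bytes:
    data = bytearray(datagram)
    for start, end in MUTABLE_FIELDS:
        data[start:end] = bytes(end - start)
    return bytes(data)

def compute_icv(key: bytes, datagram: bytes) -> bytes:
    """Integrity check value: HMAC-SHA-256 over the normalized datagram."""
    return hmac.new(key, _normalize(datagram), hashlib.sha256).digest()

def verify_icv(key: bytes, datagram: bytes, icv: bytes) -> bool:
    """Recompute the ICV with the shared secret key and compare in constant time."""
    return hmac.compare_digest(compute_icv(key, datagram), icv)
```

A router decrementing the TTL does not invalidate the check, but any change to the addresses or payload does. An attacker without the secret key cannot produce a matching ICV.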

IPSec requires a key management protocol. The IETF has standardized the Internet Security Association & Key Management Protocol (ISAKMP) and Internet Key Exchange (IKE) for this purpose. ISAKMP defines the procedures for authenticating a communicating peer, creating and managing Security Associations, key generation techniques, and threat mitigation (e.g. against denial of service and replay attacks). These are necessary to establish and maintain secure communications in an Internet environment. A Security Association (SA) is a security-protocol-specific set of parameters that defines the services and mechanisms necessary to protect traffic at that security protocol location.

ISAKMP separates the details of security association management and key management from the details of key exchange. It provides a framework for Internet key management, but it does not define session keys by itself. IKE is a protocol that defines key exchange functions for ISAKMP.
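IKE derives its keying material from a Diffie-Hellman exchange. Each peer publishes g^x mod p, and both arrive at the same secret without ever sending it. The toy sketch shows only that arithmetic and must not be used for real key exchange:

- The modulus 2^127 - 1 is far too weak and is not one of the standardized IKE groups.
- The base 3 is not checked to be a generator.
- The exchange is unauthenticated, whereas IKE also authenticates the peers.

```python
import secrets

P = 2**127 - 1   # toy prime modulus, NOT a standardized IKE group
G = 3            # toy base

def make_keypair() -> tuple[int, int]:
    """Pick a private exponent and compute the public value g^x mod p."""
    private = secrets.randbelow(P - 3) + 2        # 2 <= private <= P - 2
    public = pow(G, private, P)
    return private, public

def shared_secret(own_private: int, peer_public: int) -> int:
    """Both peers compute g^(ab) mod p from their own private and the peer's public value."""
    return pow(peer_public, own_private, P)

initiator_priv, initiator_pub = make_keypair()
responder_priv, responder_pub = make_keypair()
# Only the public values would cross the network.
assert shared_secret(initiator_priv, responder_pub) == shared_secret(responder_priv, initiator_pub)
```

In IKE the shared value is not used directly. Session keys for the Security Associations are derived from it together with other exchanged data.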

Protocols and programs discussed in this subsection are further studied in [5]. IPSec is defined in [61], ISAKMP in [76] and IKE in [45].

2. Access Control Tools

Hubs, routers and firewalls can be used to limit access to networks or to parts of networks. These security level operations can be restricted to a date or to a time period.

- Hubs used in LANs are provided with simple security properties. Hubs protect sensitive data on the network by checking destination addresses on each packet and sending readable packets only to authorized nodes. Hubs automatically detect and/or disable unauthorized log-on attempts and record the events at the management station. Hubs also track changes involving users and devices on the network, giving the manager a complete record. These security level operations can be restricted to a date or a time period.

- Routers are provided with security properties as well. A router handles packets up through the IP layer. The router forwards each packet based on the packet's destination address, and the route to that destination is indicated in the routing table [49]. Routers can improve network security, but they also introduce new problems: routing protocols are susceptible to security attacks, and routing mistakes may let unauthorized persons into the network. Unauthorized remote and local operation of routers must be prevented by requiring usernames.

Traffic can be controlled by packet filtering based on access control lists, which define which addresses and protocols may be routed to each interface. Features such as NAT (Network Address Translation), PAT (Port Address Translation), and logging of events and alarms can be attached to the router. However, it has not been possible to expand the capacity of traditional routers as cost-effectively as the capacity of PC workstations or the network traffic volume [51].


- Firewall systems control connections between closed networks and the outside world. There are two major approaches used to build firewalls: packet filtering and proxy services. Packet filtering systems route packets between internal and external hosts, but they do it selectively. They allow or block certain types of packets in a way that reflects a site's own security policy. The type of router used in a packet filtering firewall is known as a screening router.

Proxy services are specialized application or server programs that run on a firewall host: either a dual-homed host with an interface on the internal network and one on the external network, or some other bastion host that has access to the Internet and is accessible from the internal machines. These programs take users' requests for Internet services (such as FTP and Telnet) and forward them, as appropriate according to the site's security policy, to the actual services. The proxies provide replacement connections and act as gateways to the services. For this reason, proxies are sometimes known as application-level gateways [24].
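The packet filtering done with access control lists and screening routers can be sketched as a first-match rule list with an implicit final deny, which is how many router ACLs behave. The rule fields (action, protocol, source network, destination port) and the example rules are hypothetical and far simpler than real ACL syntax.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class AclRule:
    action: str                      # "permit" or "deny"
    protocol: str                    # "tcp", "udp", "icmp" or "any"
    source: str                      # source network, e.g. "10.0.0.0/8"
    dst_port: Optional[int] = None   # None matches any destination port

    def matches(self, protocol: str, src_ip: str, dst_port: Optional[int]) -> bool:
        if self.protocol not in ("any", protocol):
            return False
        if ipaddress.ip_address(src_ip) not in ipaddress.ip_network(self.source):
            return False
        return self.dst_port is None or self.dst_port == dst_port

def filter_packet(acl: list[AclRule], protocol: str, src_ip: str,
                  dst_port: Optional[int] = None) -> str:
    """Return the action of the first matching rule; unmatched packets are denied."""
    for rule in acl:
        if rule.matches(protocol, src_ip, dst_port):
            return rule.action
    return "deny"  # implicit deny at the end of the list

# Hypothetical inbound ACL for an interface facing the Internet.
INBOUND = [
    AclRule("deny", "any", "10.0.0.0/8"),        # spoofed internal source addresses
    AclRule("permit", "tcp", "0.0.0.0/0", 25),   # inbound mail (SMTP)
    AclRule("permit", "tcp", "0.0.0.0/0", 80),   # web traffic
    AclRule("permit", "icmp", "0.0.0.0/0"),
]
```

Rule order matters: the anti-spoofing rule comes first, so a packet claiming an internal source address is dropped even if it is addressed to port 80.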

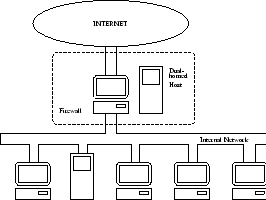

There are three ways to put various firewall components together:

A dual-homed host architecture is built around the dual-homed host computer, a computer that has at least two network interfaces. Such a host could act as a router between the networks these interfaces are attached to; it is capable of routing IP packets from one network to another. Systems inside the firewall can communicate with the dual-homed host, and systems outside the firewall (on the Internet) can communicate with the dual-homed host, but these systems cannot communicate directly with each other. IP traffic between them is completely blocked.

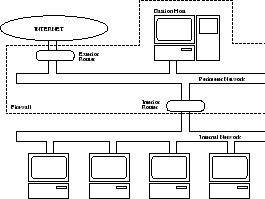

Figure 5.1 shows the network architecture for a dual-homed host firewall. The dual-homed host sits between, and is connected to, the Internet and the internal network [24].

Figure 5.1: Dual-homed host architecture

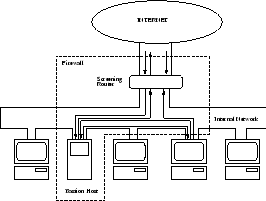

- Whereas a dual-homed host architecture provides services from a host that is attached to multiple networks (but has routing turned off), a screened host architecture provides services from a host that is attached only to the internal network, using a separate router. In this architecture, the primary security is provided by packet filtering. For example, packet filtering is what prevents people from going around proxy servers to make direct connections. Figure 5.2 shows the network architecture for the screened host architecture [24].

Figure 5.2: Screened host architecture

The screened subnet architecture adds an extra layer of security to the screened host architecture by adding a perimeter network that further isolates the internal network from the Internet. The perimeter network is an additional network between the external network and the protected internal network, and it offers one more layer of protection between attackers and the internal systems. There are two screening routers, each connected to the perimeter net. One screening router sits between the perimeter net and the internal network, and the other sits between the perimeter net and the external network (usually the Internet). To break into the internal network with this type of architecture, an attacker would have to get past both routers. Figure 5.3 shows the network architecture for the screened subnet architecture [24].

Figure 5.3: Screened subnet architecture

3. Fault Management

Physical network problems account for more than half of all network problems. Locating the origins of problems such as a fiber cut, incorrect earthing, or broken or incorrectly connected adapters costs network providers time and money. Network management systems have also traditionally focused on the logical connection between the end user and the network destination, since many network problems have been the result of errors created by software applications [92]. Finding and repairing failures of software applications is usually difficult, for example in cases where a workstation sends but does not receive packets, too many collisions occur, or frames are too short or too long.

Fault management means troubleshooting, fault localization, isolation and correction. Today, the process of fault management needs to be automated. Network problems must be corrected rapidly and accurately, whether they are detected by the network or reported by the end user. The network management application should keep working even if the entire network has failed.

Expert systems provide an efficient and cost-effective way of automating network fault management. With automated fault management, problems can be detected and diagnosed faster and more efficiently. However, real-time performance is a problem for expert systems [41]: bounds on response times are difficult to establish. There are also risks in pushing the limits of automation through the introduction of new or only partially proven technologies.

There are two mechanisms for transferring network management information from a managed entity to a manager: polling and sending alarms (messages initiated by the managed network elements). Both methods have advantages and disadvantages. Most network management systems use an optimal combination of alarms and polling in order to keep the advantages of each and to eliminate the disadvantages of pure polling [103].

Most systems poll the managed objects, search for error conditions, and illustrate the problem in graphical format or as a textual message. The disadvantages of polling are the response time of problem detection and the increased volume of network management traffic. Having to poll many management information base (MIB) variables per element on a large number of elements is itself a problem, and it limits the ability to monitor such a system. Polling many objects on many elements increases the amount of network management traffic flowing across the network. It is possible to minimize this through the use of hierarchies (polling an element for a general status of all the elements it polls). Even so, response time remains a problem [103].
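The hierarchical approach can be sketched with mid-level collectors that poll their own elements and keep a summary, while the top-level manager polls only the collectors. The class names, the status callback and the request counting are hypothetical. The manager's polling traffic grows with the number of collectors rather than the number of elements, while the detection delay still depends on the polling interval.

```python
from typing import Callable

StatusFn = Callable[[str], bool]   # returns True if the element answers as healthy

class Collector:
    """Mid-level poller responsible for a group of elements."""
    def __init__(self, elements: list[str], read_status: StatusFn):
        self.elements = elements
        self.read_status = read_status
        self.failed: list[str] = []

    def poll_elements(self) -> int:
        """Poll every local element; return the number of requests sent."""
        self.failed = [e for e in self.elements if not self.read_status(e)]
        return len(self.elements)

    def summary(self) -> list[str]:
        """General status of all the elements this collector polls."""
        return list(self.failed)

class TopLevelManager:
    def __init__(self, collectors: list[Collector]):
        self.collectors = collectors
        self.requests_sent = 0

    def poll(self) -> list[str]:
        """Poll each collector once for its summary and merge the results."""
        failed: list[str] = []
        for collector in self.collectors:
            self.requests_sent += 1
            failed.extend(collector.summary())
        return failed
```

With four collectors of five switches each, the manager sends four requests per cycle instead of twenty. The twenty element polls still happen, but they stay local to each collector's part of the network.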

If a system fails shortly after being polled, there may be a significant delay before it is polled again. During this time, the manager must assume that the failing system is working acceptably. Improving the mean time to detect failures might not greatly improve the time to correct them, but a problem will generally not be repaired until it is detected.

The second method, sending alarms, also has problems: critical information may be lost, and the manager may be over-informed. An ideal management system would generate alarms to notify its management station of error conditions. However, alarms usually cannot be delivered when the managed entity fails or the network experiences problems. It is important to remember that failing elements and networks cannot be trusted to inform a manager that they are failing. The manager should periodically poll to ensure connectivity to remote stations and to get copies of alarms that were not delivered by the network [103].

Alarms in a failing system can be generated so rapidly that they impact functioning resources. An "open loop" system, in which the flow of alarms to a manager is fully asynchronous, can result in an excess of alarms being delivered. All available network bandwidth into the manager may become saturated with incoming alarms, which prevents the manager from disabling the mechanism generating them. Methods are needed to limit the volume of alarm transmission and to help deliver only the minimum necessary information to a manager. Alarm correlation is done by filtering out secondary alarms, e.g. using expert systems.

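One common way to bound alarm volume is a token bucket at the alarm source. This is offered as an illustration, not as the method of [103]. Alarms are sent only while tokens are available, tokens refill at a fixed rate, and suppressed alarms are counted so the manager can later be told how many were dropped. The rate and burst values are arbitrary.

```python
class AlarmThrottle:
    """Token-bucket limiter for outgoing alarms."""

    def __init__(self, rate_per_s: float, burst: int):
        self.rate = rate_per_s        # tokens added per second
        self.capacity = burst         # maximum burst of alarms sent back to back
        self.tokens = float(burst)
        self.last = 0.0
        self.suppressed = 0           # alarms dropped; can be reported in a summary

    def allow(self, now: float) -> bool:
        """Return True if an alarm raised at time `now` (seconds) may be sent."""
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        self.suppressed += 1
        return False
```

With a rate of one alarm per second and a burst of three, a storm of ten simultaneous alarms lets three through and suppresses seven. The link to the manager therefore stays usable for commands such as disabling the alarm source.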

Many management tools also log events in different formats and from different sources. These events should later be correlated using their time stamps to identify the source of the problem. Topology information is also needed to identify the precise location of the problem.
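Time-stamp merging and topology-based filtering of secondary alarms can be combined in a small sketch. The following are all assumptions made for illustration; production correlators use far richer rules:

- the event format;
- the `parent` map, which records the element through which each element is reached and must describe a tree;
- the rule that a "down" alarm is secondary when an upstream element is already down.

```python
import heapq
from typing import Iterable

Event = tuple[float, str, str]   # (timestamp, element, message)

def correlate(logs: Iterable[list[Event]], parent: dict[str, str]) -> list[Event]:
    """Merge time-sorted logs and keep only 'down' alarms that are not explained
    by an element upstream (towards the manager) that was already down."""
    down: set[str] = set()
    root_causes: list[Event] = []
    for event in heapq.merge(*logs):       # each log must already be sorted by time
        timestamp, element, message = event
        if message != "down":
            continue
        secondary = False
        hop = parent.get(element)
        while hop is not None:             # walk upstream along the topology tree
            if hop in down:
                secondary = True
                break
            hop = parent.get(hop)
        down.add(element)
        if not secondary:
            root_causes.append(event)
    return root_causes
```

If a router fails and the hosts behind it report themselves unreachable half a second later, only the router's alarm survives. Merging by time stamp first is what allows the upstream failure to be recognized as the earlier event.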

Many devices have buffers reserved for logs. When a buffer becomes full, new logs are written over the oldest ones. If the buffer becomes full quickly, some important logs may disappear before they are noticed. Buffers also consume disk space that could be used for other purposes.
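A log buffer that overwrites its oldest entries behaves like a ring buffer; in Python, `collections.deque` with a `maxlen` works this way. The buffer size and messages below are made up. They show how an important early entry is lost when a burst of follow-up events fills the buffer.

```python
from collections import deque

log_buffer = deque(maxlen=4)   # the device keeps only the 4 newest entries

log_buffer.append("09:00:01 link eth0 down")      # the important root-cause entry
for n in range(4):                                # a burst of follow-up messages
    log_buffer.append(f"09:00:0{2 + n} retransmission timeout #{n}")

# The "link eth0 down" entry has been overwritten by the burst.
print(list(log_buffer))
```

Copying logs to a management station before the buffer wraps, for example by sending them to a central log server, avoids this loss.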

Several diagnostic tools are used for troubleshooting and fault localization purposes. These include ifconfig, arp, netstat, ping, nslookup, dig, ripquery, traceroute, and etherfind [49, pages 260-262].

The ping (Packet InterNet Groper) program is used with TCP/IP internets to test the reachability of destination hosts by sending an ICMP (Internet Control Message Protocol) echo request and waiting for a reply. Ping can only be used to determine whether the target IP address is reachable or not. Since the echo request and the response to it may use different routes, the failed route cannot be determined. Ping can also be used to measure response times for different packet sizes [16, page 33].
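The echo request that ping sends is a small ICMP message. It contains type 8, code 0, a checksum, an identifier, a sequence number, and optional data whose size can be varied. The sketch only builds the packet and its Internet checksum (the one's-complement sum of 16-bit words). Actually sending the packet needs a raw socket and usually administrator privileges, so that part is left out. The identifier, sequence number and payload are arbitrary.

```python
import struct

ICMP_ECHO_REQUEST = 8

def internet_checksum(data: bytes) -> int:
    """One's-complement sum of 16-bit words, as used by IP and ICMP."""
    if len(data) % 2:
        data += b"\x00"                      # pad to a whole number of 16-bit words
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]
    while total >> 16:                       # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF

def build_echo_request(identifier: int, sequence: int, payload: bytes) -> bytes:
    """Type, code, checksum, identifier, sequence number, then the data."""
    header = struct.pack("!BBHHH", ICMP_ECHO_REQUEST, 0, 0, identifier, sequence)
    checksum = internet_checksum(header + payload)   # computed with checksum field = 0
    header = struct.pack("!BBHHH", ICMP_ECHO_REQUEST, 0, checksum, identifier, sequence)
    return header + payload
```

A receiver validates the message by recomputing the checksum over the whole packet, which yields 0 for an intact message. Varying the payload length is how the response times of different packet sizes are measured.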

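Unlike ping, traceroute forces every router along the path to send back an ICMP control message. It does this by sending probes with an increasing time-to-live (TTL), so that each router that discards a probe because its TTL has expired answers with an ICMP Time Exceeded message. Most traceroute applications send a sequence of User Datagram Protocol (UDP) packets to a randomly selected UDP port [9, page 202]. Sometimes firewalls filter these packets out of the main traffic, in which case the tracing ends.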
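The TTL mechanism behind traceroute can be simulated without a network:

- Probe n is sent with TTL n, and each router decrements the TTL.
- The router at which the TTL reaches zero answers with Time Exceeded.
- The destination answers the unused UDP port with Port Unreachable.

The path, the set of nodes that silently drop probes (standing in for filtering firewalls) and the function names are hypothetical. Unlike a real traceroute, which keeps printing "*" for unanswered probes, this simulation simply stops at the first probe with no reply.

```python
from typing import Optional

def send_probe(path: list[str], ttl: int, filtered: set[str]) -> Optional[tuple[str, str]]:
    """Forward one UDP probe along the path; return (responder, ICMP type) or None."""
    for index, node in enumerate(path):
        if node in filtered:
            return None                          # silently dropped: no ICMP reply
        if index == len(path) - 1:
            return node, "port-unreachable"      # destination: nothing listens on the port
        ttl -= 1                                 # each router decrements the TTL
        if ttl == 0:
            return node, "time-exceeded"
    return None

def traceroute(path: list[str], filtered: set[str], max_hops: int = 30) -> list[Optional[str]]:
    """Send probes with TTL 1, 2, ... and record who answered each one."""
    hops: list[Optional[str]] = []
    for ttl in range(1, max_hops + 1):
        reply = send_probe(path, ttl, filtered)
        if reply is None:
            hops.append(None)                    # '*': the tracing ends here
            break
        responder, kind = reply
        hops.append(responder)
        if kind == "port-unreachable":           # reached the destination
            break
    return hops
```

For the path r1, r2, r3, host the result lists every hop. If r2 is a filtering firewall, the trace shows r1 followed by a missing reply.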

After detecting a fault, operators should find the root cause of the problem as soon as possible. Clearly defining the problem helps to distinguish the root cause from the symptoms [84]. Operators must also determine what trouble the fault causes and to whom.

First, it is useful to identify, by a process of elimination, the parts of the network where the fault cannot be; these parts can then be disregarded. If the problem is very serious and difficult, the network can be divided into smaller parts that are inspected one by one. Connectivity tests are also used to find the failed devices. At the same time, it can be determined whether the fault is local or widespread. Testing data integrity reveals whether some of the packets are lost in transmission. Delays must also be tested, because some faults result from excessive delays [9, page 198].
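When the suspect part of the network is a chain of hops, dividing it into smaller parts can be done by repeated halving. Test connectivity to the midpoint, then continue in whichever half contains the fault. This binary search is a swapped-in refinement of the text's one-by-one inspection. It assumes a single fault and a hypothetical test `reachable(i)` that is true for every hop before the fault and false from the fault onwards.

```python
from typing import Callable

def locate_fault(hop_count: int, reachable: Callable[[int], bool]) -> int:
    """Return the index of the first unreachable hop using about log2(n) tests.

    Assumes reachable(i) is True for i < fault and False for i >= fault,
    and that the last hop (hop_count - 1) is known to be unreachable.
    """
    low, high = 0, hop_count - 1
    while low < high:
        mid = (low + high) // 2
        if reachable(mid):
            low = mid + 1      # the fault lies further along the path
        else:
            high = mid         # the fault is at mid or before it
    return low
```

For a path of eight hops with the fault at hop 5, three connectivity tests suffice, compared with up to eight when the hops are tested one by one.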

Measuring the accessibility of devices helps to collect information about the status of the network. Usually a few devices in the network are chosen, and the functionality of the connections from them to other devices is measured. The measurements are made by a monitoring device, and the results are interpreted afterwards.

Measuring accessibility is not a perfect method, for example when only routers are monitored. If a router has been set up to give priority to packet forwarding, it will forward packets normally even when it is heavily loaded, yet it may be unable to answer the messages of the monitoring device. Another example is that two devices that can both be reached might not have a connection with each other.

Another testing method is to monitor routing tables. Routing should change only when the topology of the network changes. If routes change at other times, there is probably something wrong with the network [9, pages 183-189].
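A minimal sketch of this check, assuming that routing tables have already been collected as hypothetical dictionaries mapping a destination prefix to a next hop, and that the operator knows whether the topology changed between the two snapshots.

```python
from typing import Dict, List, Tuple

RoutingTable = Dict[str, str]  # destination prefix -> next hop


def route_changes(old: RoutingTable, new: RoutingTable) -> List[Tuple[str, str, str]]:
    """List (prefix, old next hop, new next hop) for added, removed or changed routes."""
    changes = []
    for prefix in sorted(old.keys() | new.keys()):
        before, after = old.get(prefix, "-"), new.get(prefix, "-")
        if before != after:
            changes.append((prefix, before, after))
    return changes


def suspicious(old: RoutingTable, new: RoutingTable, topology_changed: bool) -> bool:
    """Routing should change only when the topology changes."""
    return bool(route_changes(old, new)) and not topology_changed


monday = {"10.0.0.0/8": "r1", "192.168.1.0/24": "r2"}
tuesday = {"10.0.0.0/8": "r3", "192.168.1.0/24": "r2"}
print(route_changes(monday, tuesday))  # [('10.0.0.0/8', 'r1', 'r3')]
```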

Routing tables reflect the topology of the network. Deriving the topology from routing tables is more difficult than monitoring accessibility. To obtain information from the routing tables of routers, either the routers must support the Simple Network Management Protocol (SNMP), the dynamic routing tables must answer requests, or the routing information must be captured from a routing protocol. These requirements are not always met, even though SNMP is widely used. Analysis of the routing tables can concentrate on the parts of the network that are most susceptible to failures.
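One reason topology discovery from routing tables is harder is that each table only tells which neighbour is used towards each destination. A sketch, assuming the tables (hypothetical data below) have already been obtained by one of the means above: each distinct next hop implies a link between the router and that neighbour. Links that currently carry no route, such as idle backup links, remain invisible to this method.

```python
from typing import Dict, Set, Tuple

# Hypothetical routing tables: destination prefix -> next-hop router,
# with "direct" marking a directly attached network.
TABLES: Dict[str, Dict[str, str]] = {
    "R1": {"10.1.0.0/16": "direct", "10.2.0.0/16": "R2", "10.3.0.0/16": "R2"},
    "R2": {"10.1.0.0/16": "R1", "10.2.0.0/16": "direct", "10.3.0.0/16": "R3"},
    "R3": {"10.1.0.0/16": "R2", "10.2.0.0/16": "R2", "10.3.0.0/16": "direct"},
}


def links_from_tables(tables: Dict[str, Dict[str, str]]) -> Set[Tuple[str, str]]:
    """Infer router-to-router links from the next hops used in routing tables."""
    links: Set[Tuple[str, str]] = set()
    for router, table in tables.items():
        for next_hop in table.values():
            if next_hop != "direct":
                a, b = sorted((router, next_hop))  # store each link only once
                links.add((a, b))
    return links


print(sorted(links_from_tables(TABLES)))  # [('R1', 'R2'), ('R2', 'R3')]
```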

6. Traffic Management

This chapter discusses data communications in IP networks at the OSI network and link layers. Section 6.1 describes different types of IP network traffic, introducing the terms Quality of Service and Grade of Service. Section 6.2 discusses the development of a new service model for IP networks. Section 6.3 discusses performance management and performance-related issues.

1. Communications in IP Networks

Data communications in IP networks can be divided into connectionless and connection-oriented communications. Communication based on the Internet Protocol (IP) is connectionless by nature: no end-to-end connection is established before the protocol transmits data [49, page 13]. In IP networks, Quality of Service (QoS) is defined in terms of parameters such as bandwidth, delay, delay variation (jitter), and packet loss probability [14].
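These parameters can be estimated from a packet trace. The sketch below assumes hypothetical send and receive timestamps for a test stream of equal-sized packets, taken with synchronized clocks at both ends. It measures achieved throughput rather than link bandwidth and uses one simple jitter definition (the mean absolute difference between consecutive delays); other definitions, such as the smoothed estimator used by RTP, are also in use.

```python
from statistics import mean
from typing import Dict, Optional, Sequence


def qos_metrics(sent: Sequence[float], received: Sequence[Optional[float]],
                packet_bytes: int) -> Dict[str, float]:
    """sent[i]: send time of packet i in seconds; received[i]: arrival time, or None if lost."""
    delays = [r - s for s, r in zip(sent, received) if r is not None]
    if not delays:
        raise ValueError("no packets were delivered")
    lost = sum(1 for r in received if r is None)
    # Jitter as the mean absolute change between consecutive one-way delays.
    jitter = mean(abs(b - a) for a, b in zip(delays, delays[1:])) if len(delays) > 1 else 0.0
    duration = max(r for r in received if r is not None) - min(sent)
    return {
        "mean_delay_s": mean(delays),
        "jitter_s": jitter,
        "loss_probability": lost / len(sent),
        "throughput_bit_s": 8 * packet_bytes * len(delays) / duration,
    }


print(qos_metrics([0.0, 1.0, 2.0, 3.0], [0.5, 1.5, None, 3.75], packet_bytes=100))
```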

Connection-oriented communication in IP networks is enabled by protocols built on top of connectionless IP: a logical connection between the communicating network nodes is established before transmission [49, page 20]. Grade of Service (GoS) is defined in terms of connection blocking probability (i.e. the probability of failing to establish a connection). Connection blocking can be controlled using Connection Admission Control (discussed in section 6.2).
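One classic way to estimate a connection blocking probability, borrowed from telephony and shown here only as an illustration under its usual assumptions, is the Erlang B formula. With Poisson connection arrivals, an offered load of A erlangs, N connections that can be carried simultaneously, and blocked requests simply refused, the blocking probability is B(N, A) = (A^N / N!) / sum_{k=0..N} (A^k / k!). The sketch uses the numerically stable recursion B(0) = 1, B(n) = A B(n-1) / (n + A B(n-1)); the function names are hypothetical.

```python
def erlang_b(offered_load: float, channels: int) -> float:
    """Blocking probability when `offered_load` erlangs are offered to `channels` channels."""
    if offered_load < 0 or channels < 0:
        raise ValueError("offered load and channel count must be non-negative")
    blocking = 1.0  # B(0, A) = 1: with no channels every request is blocked
    for n in range(1, channels + 1):
        blocking = offered_load * blocking / (n + offered_load * blocking)
    return blocking


def channels_for_gos(offered_load: float, target_blocking: float) -> int:
    """Smallest number of channels whose blocking probability meets the GoS target."""
    if not 0.0 < target_blocking <= 1.0:
        raise ValueError("target blocking must be in (0, 1]")
    n = 0
    while erlang_b(offered_load, n) > target_blocking:
        n += 1
    return n
```

For example, `channels_for_gos(10.0, 0.01)` dimensions a link for a GoS of 1 % blocking at 10 erlangs of offered load.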

Traffic in IP networks is composed of individual transactions and flows. A flow is a sequence of packets belonging to one instance of an application running between hosts. Flows can be divided into two categories: stream flows and elastic flows.

- Stream flows are generated by real-time audio and video applications such as Internet telephony and video conferencing. These applications may require a minimum level of QoS in order to function properly, and thus would benefit from guaranteed QoS.

- Elastic flows are generated by non-real-time applications (e.g. e-mail and FTP). They do not place critical demands on QoS, but adjust their rates to make full use of the QoS available [89].
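In practice a flow is often identified by the packet fields that stay constant for one application instance. A minimal sketch, assuming the common (but not universal) convention of the 5-tuple of source and destination address, transport protocol, and source and destination port; the `Packet` type and the sample trace are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Tuple


class Packet(NamedTuple):
    src: str
    dst: str
    proto: str
    sport: int
    dport: int
    size: int  # bytes


FlowKey = Tuple[str, str, str, int, int]


def group_into_flows(packets: List[Packet]) -> Dict[FlowKey, List[Packet]]:
    """Group packets that share the same 5-tuple into one flow."""
    flows: Dict[FlowKey, List[Packet]] = defaultdict(list)
    for p in packets:
        flows[(p.src, p.dst, p.proto, p.sport, p.dport)].append(p)
    return dict(flows)


trace = [
    Packet("10.0.0.1", "10.0.0.9", "UDP", 5004, 5004, 172),  # e.g. a voice stream
    Packet("10.0.0.2", "10.0.0.9", "TCP", 40001, 21, 1500),  # e.g. an FTP session
    Packet("10.0.0.1", "10.0.0.9", "UDP", 5004, 5004, 172),
]
for key, pkts in group_into_flows(trace).items():
    print(key, len(pkts), "packets")
```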

2. New Service Model for IP Networks

The current IP architecture does not support any QoS guarantees, because routing is traditionally based on the best-effort principle: each packet is treated independently and processed in the order of arrival [14].

The diversity of applications and their requirements creates a need for a new service model for IP networks. The aim is to satisfy the requirements of rigid real-time applications while avoiding the costs of over-provisioning. This could be done by introducing a service model with several classes of QoS instead of a single class of best-effort service.

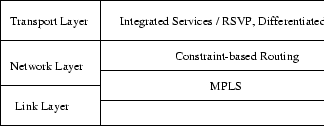

The Internet Engineering Task Force (IETF) has three working groups developing the new service model or network architecture. These working groups are described and compared in the following subsections. The Traffic Engineering/Constraint-based Routing approach is also discussed. The relationship between the OSI layers and the concepts described in this section is illustrated in figure 6.1.

Figure 6.1: The relationship between OSI layers and concepts described in section 6.2 [131].

The IETF Integrated Services (IntServ) working group is currently defining an enhanced service model that creates two service classes in addition to best-effort service: guaranteed service for applications requiring a fixed delay bound, and controlled-load service for applications that require reliable, enhanced best-effort service. The model is based on resource reservation initiated by applications, which could be done, for example, using the Resource Reservation Protocol [54,131].

The Resource Reservation Protocol (RSVP) is used to signal routers in the network to reserve resources and set up a path for a flow [48,131]. If RSVP is used in the network, there should be a mechanism for managing the resource reservation policies of the applications that initiate reservations. RSVP and resource reservation are discussed further in [48, ch. 13] and in [133]. RSVP is specified in [19].
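The all-or-nothing nature of a reservation along a path can be sketched as follows. This is deliberately much simpler than RSVP itself, which is receiver-initiated, uses PATH and RESV messages and keeps soft state that must be refreshed; here a hypothetical `reserve_path` function books a rate on each link of a given path and undoes the partial booking if any link lacks capacity.

```python
from typing import Dict, List


def reserve_path(path: List[str], capacity: Dict[str, float],
                 reserved: Dict[str, float], rate: float) -> bool:
    """Reserve `rate` on every link of `path`, or on none of them."""
    booked: List[str] = []
    for link in path:
        if reserved[link] + rate > capacity[link]:
            for undo in booked:  # roll back the links already booked
                reserved[undo] -= rate
            return False
        reserved[link] += rate
        booked.append(link)
    return True


capacity = {"A-B": 10.0, "B-C": 5.0}  # Mbit/s, hypothetical
reserved = {"A-B": 0.0, "B-C": 0.0}
print(reserve_path(["A-B", "B-C"], capacity, reserved, 4.0), reserved)
print(reserve_path(["A-B", "B-C"], capacity, reserved, 2.0), reserved)
```

The second request fails on link B-C, and the 2 Mbit/s tentatively booked on A-B is released again.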

To provide the QoS allocated by a reservation protocol, Connection Admission Control (CAC) should be used in routers. CAC blocks incoming stream flows if the increase in traffic would drop QoS below an acceptable level for that flow or for any previously accepted flow. On the other hand, CAC also affects GoS, since the level of GoS decreases when connections are refused [75, page 33].
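A minimal CAC sketch, assuming a single link modelled as an M/M/1 queue, so that the mean delay at total load λ and service rate μ (both in packets per second) is 1/(μ - λ). Each flow states the largest mean delay it accepts; a new flow is admitted only if the resulting delay is acceptable both for it and for every flow accepted earlier, and the ratio of refused requests gives a measured GoS. The queueing model and all names are illustrative assumptions, not an actual router algorithm.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Flow:
    rate: float       # packets per second
    max_delay: float  # largest acceptable mean delay in seconds


@dataclass
class AdmissionController:
    service_rate: float  # link capacity in packets per second
    accepted: List[Flow] = field(default_factory=list)
    offered: int = 0
    blocked: int = 0

    def mean_delay(self, load: float) -> float:
        """Mean time in an M/M/1 system; infinite when the link is saturated."""
        return 1.0 / (self.service_rate - load) if load < self.service_rate else float("inf")

    def request(self, flow: Flow) -> bool:
        self.offered += 1
        load = sum(f.rate for f in self.accepted) + flow.rate
        delay = self.mean_delay(load)
        # Admit only if the new flow AND every previously accepted flow keep their QoS.
        if all(delay <= f.max_delay for f in self.accepted + [flow]):
            self.accepted.append(flow)
            return True
        self.blocked += 1
        return False

    def blocking_ratio(self) -> float:
        """Measured GoS: fraction of connection requests that were refused."""
        return self.blocked / self.offered if self.offered else 0.0


cac = AdmissionController(service_rate=100.0)
print(cac.request(Flow(50.0, 0.06)), cac.request(Flow(30.0, 0.1)), cac.request(Flow(10.0, 1.0)))
```

The third flow would tolerate the resulting delay itself, but it is refused because the first flow's delay bound would be violated.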

Besides its benefits, CAC has one disadvantage: implementing it would further increase the complexity of networks that are already complex enough. The benefits and disadvantages of CAC are discussed in [97].

According to Xiao and Ni [131], the Integrated Services architecture has several problems: it does not scale well, it places high demands on routers, and it requires ubiquitous deployment for guaranteed service. According to Baumgartner et al. [11], the architecture is suitable only for small networks (e.g. corporate networks or Virtual Private Networks (VPNs)), not for Internet backbone networks.

The approach of the IETF Differentiated Services (DiffServ) working group involves creating distinct Classes of Service (CoS), each with reserved resources. The basic difference between the IntServ and DiffServ architectures is that IntServ provides an absolute level of QoS, whereas DiffServ is a relative-priority scheme. Secondly, in DiffServ the QoS of each CoS is defined by an agreement between the customer and the service provider. This eliminates the need for each application to signal its QoS needs at run time. It also provides better scalability, as routers do not need to maintain per-flow state information [53,131,4].
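The relative-priority idea can be illustrated with a strict-priority scheduler: packets of a higher class are always sent before packets of a lower class, but no class receives an absolute delay bound. This is only a sketch with hypothetical class names; the per-hop behaviors actually defined for DiffServ are specified differently, and strict priority can starve the lower classes under heavy load.

```python
from collections import deque
from typing import Deque, Dict, List, Optional


class PriorityScheduler:
    """Strict-priority scheduling over a fixed set of classes of service."""

    def __init__(self, classes: List[str]) -> None:
        self.order = classes  # highest priority first
        self.queues: Dict[str, Deque[str]] = {c: deque() for c in classes}

    def enqueue(self, cos: str, packet: str) -> None:
        self.queues[cos].append(packet)

    def dequeue(self) -> Optional[str]:
        """Send the oldest packet of the highest non-empty class, FIFO within a class."""
        for cos in self.order:
            if self.queues[cos]:
                return self.queues[cos].popleft()
        return None


s = PriorityScheduler(["premium", "best-effort"])
for cos, pkt in [("best-effort", "b1"), ("premium", "p1"), ("best-effort", "b2"), ("premium", "p2")]:
    s.enqueue(cos, pkt)
print([s.dequeue() for _ in range(5)])  # ['p1', 'p2', 'b1', 'b2', None]
```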

In Differentiated Services, each packet is classified by marking the DS field in the IP datagram header. Packets receive their forwarding treatment, or per-hop behavior, according to this classification. The use of the DS field is standardized in [86] and in [13]. The working group will also define a small number of per-hop behaviors [53,131].
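The DS field is the former IPv4 Type of Service octet: its six most significant bits carry the Differentiated Services Code Point (DSCP), and the two low-order bits are not part of it (they were later assigned to Explicit Congestion Notification). The helpers below convert between the two; 46 is the code point recommended for the Expedited Forwarding per-hop behavior. `mark_socket` is a hypothetical helper around the standard `IP_TOS` socket option, whose effect depends on the operating system.

```python
import socket

EF = 46      # Expedited Forwarding code point
DEFAULT = 0  # default (best-effort) per-hop behavior


def dscp_to_ds_byte(dscp: int) -> int:
    """Place a 6-bit DSCP in the upper six bits of the 8-bit DS field."""
    if not 0 <= dscp < 64:
        raise ValueError("DSCP is a 6-bit value")
    return dscp << 2


def ds_byte_to_dscp(ds_byte: int) -> int:
    """Extract the DSCP, ignoring the two low-order bits."""
    return (ds_byte >> 2) & 0x3F


def mark_socket(sock: socket.socket, dscp: int) -> None:
    """Ask the operating system to mark outgoing IPv4 packets with `dscp`."""
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp_to_ds_byte(dscp))


print(hex(dscp_to_ds_byte(EF)))  # 0xb8
```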

According to Xiao and Ni [131], DiffServ is more scalable, requires less from routers, and is easier to deploy than the IntServ architecture. Baumgartner et al. [11] discuss the DiffServ architecture, its possible drawbacks, and related issues in more detail.

3. Multi-protocol Label Switching Architecture

The Multi-protocol Label Switching (MPLS) architecture is being standardized by the IETF MPLS working group. MPLS is a forwarding scheme based on a label-swapping (label switching) paradigm instead of the standard destination-based, hop-by-hop forwarding paradigm [55,120].

Each packet arriving at an ingress router of an MPLS domain is routed, classified, and given a label. Inside the MPLS domain, forwarding decisions are made using the label instead of processing the packet header and running a routing algorithm. The label is used as an index into a forwarding table where the next hop and a new label can be found. The old label is replaced with the new one and the packet is forwarded. The label is removed as the packet leaves the MPLS domain [68].
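The label-swapping steps can be sketched with three hypothetical routers: an ingress router A that performs one ordinary longest-prefix-match lookup to classify the packet and attach a label, a core router B that only swaps labels by exact match, and an egress router C that removes the label. Addresses, labels and table contents are invented for illustration; label distribution protocols are not modelled.

```python
import ipaddress
from typing import Dict, List, Optional, Tuple

# Ingress classification: destination prefix -> (next hop, label to attach).
INGRESS_FEC: Dict[str, Tuple[str, int]] = {
    "10.0.0.0/8": ("B", 17),
    "10.1.0.0/16": ("B", 18),
}
# Label forwarding tables: incoming label -> (next hop, outgoing label);
# an outgoing label of None means the label is removed (packet leaves the domain).
LFIB: Dict[str, Dict[int, Tuple[str, Optional[int]]]] = {
    "B": {17: ("C", 22), 18: ("C", 23)},
    "C": {22: ("out", None), 23: ("out", None)},
}


def classify(destination: str) -> Tuple[str, int]:
    """Ingress: a single longest-prefix match on the destination address."""
    addr = ipaddress.ip_address(destination)
    matches = [ipaddress.ip_network(p) for p in INGRESS_FEC if addr in ipaddress.ip_network(p)]
    if not matches:
        raise LookupError(f"no route to {destination}")
    best = max(matches, key=lambda net: net.prefixlen)
    return INGRESS_FEC[str(best)]


def forward(destination: str) -> List[Tuple[str, Optional[int]]]:
    """Return the (router, label carried on arrival) sequence through the domain."""
    next_hop, label = classify(destination)
    trace: List[Tuple[str, Optional[int]]] = [("A", None)]
    current: Optional[int] = label
    while current is not None:
        trace.append((next_hop, current))
        next_hop, current = LFIB[next_hop][current]  # exact-match lookup, then swap or pop
    trace.append((next_hop, None))
    return trace


print(forward("10.1.2.3"))  # [('A', None), ('B', 18), ('C', 23), ('out', None)]
```

Only router A inspects the IP header; B and C forward on the label alone.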